🚗💨👍 Accelerating Delivery and Boosting Satisfaction: Our Code Review Improvement Journey

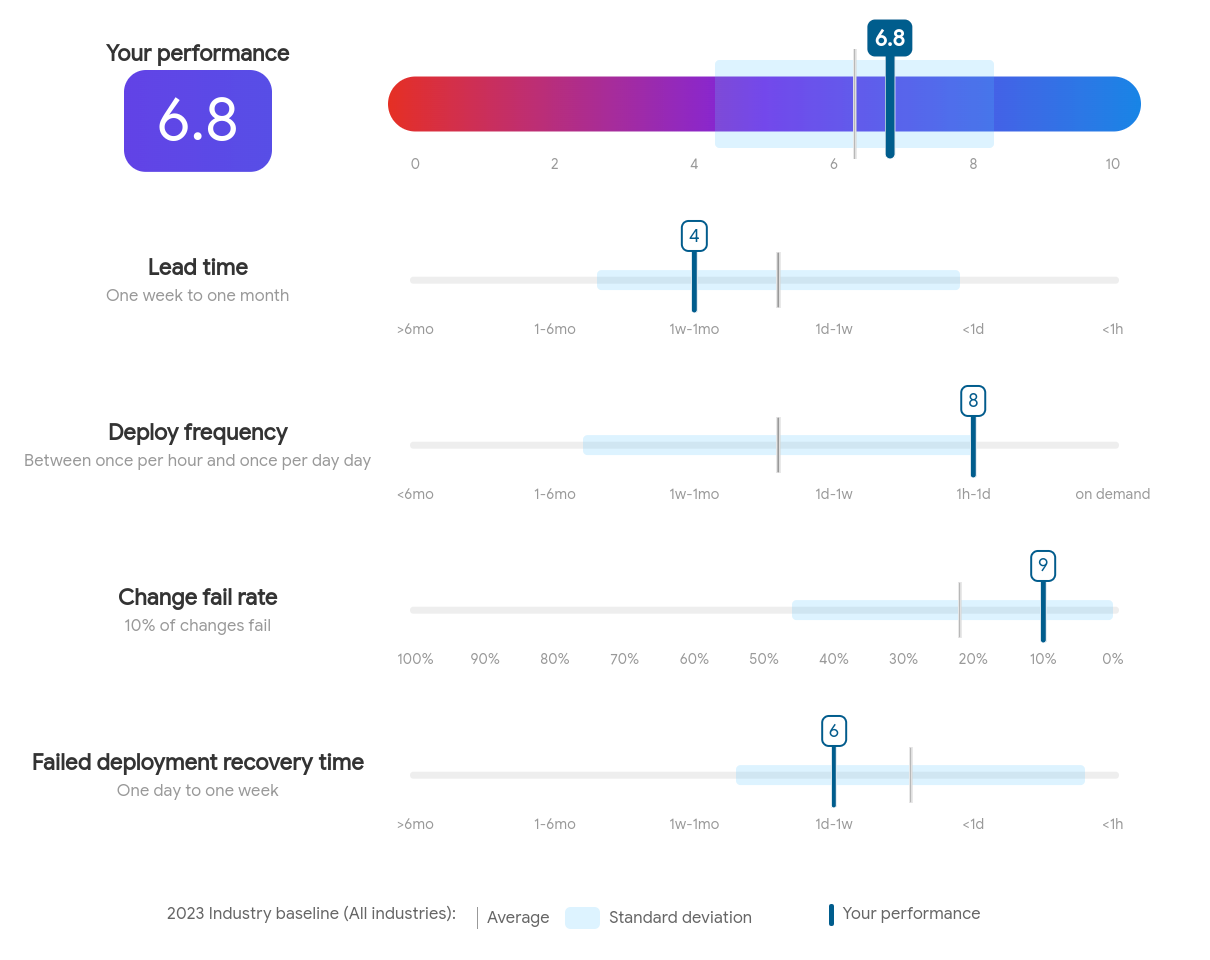

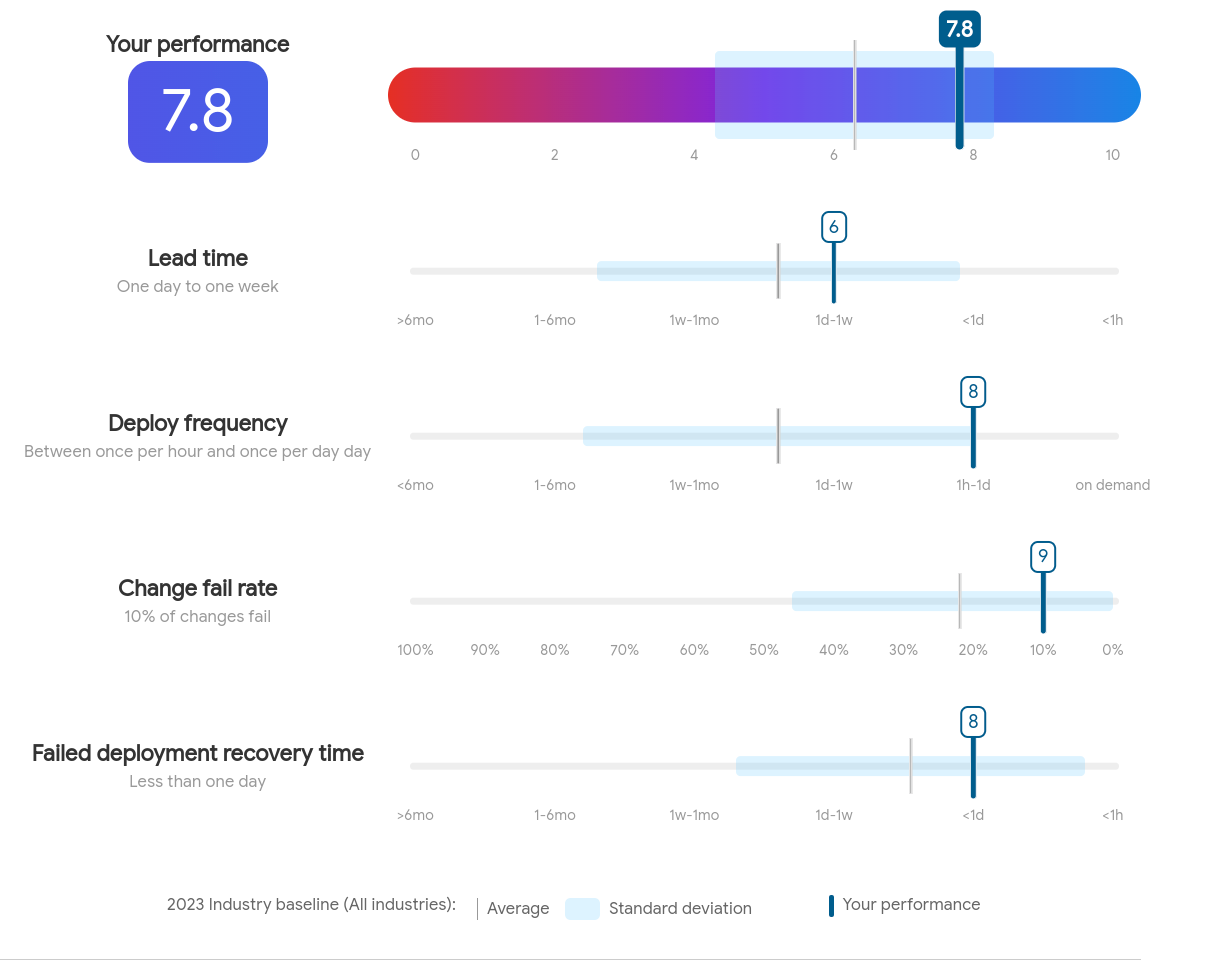

Picture this: a critical bug fix is ready, but it languishes in code review for days. Release schedules slip, customers get frustrated, and developer morale takes a hit. At Cymbal Coffee, we’ve been there. After a recent workshop on our DORA performance metrics, we saw a major opportunity: reducing lead time! We were determined to streamline our software delivery process, and code reviews were the key bottleneck to tackle.

Reading the DORA Accelerate State of DevOps Report 2023 inspired a discussion about code review. The team widely accepted that code reviews were a problem and should be improved, but the impact of doing so was not fully understood:

“Unlock software delivery performance with faster code reviews: Speeding up code reviews is one of the most effective paths to improving software delivery performance. Teams with faster code reviews have 50% higher software delivery performance.”

That led us to join a DORA community discussion on code reviews, which provided new insights and perspectives. It prompted a deeper discussion within the team about the questions published on dora.dev and the areas that could be changed:

- Are peer code reviews embedded in your process?

- How long is the duration between code completion and review?

- What is the average batch size of your code reviews?

- How many teams are involved in your reviews?

- How many different geographical locations are involved in your reviews?

- Does your team improve code quality automation based on code review suggestions?

We decided to optimise this process, and the results were significant! Not only did we improve our efficiency, but we also fostered a stronger learning culture within our team.

So what is a code review?

It’s a quality control process where developers examine each other’s code to catch errors, enhance readability, and spread knowledge. Benefits include:

- Knowledge Sharing: Reduces dependency on single developers and encourages collaboration.

- Improved Code Quality: Catches bugs early and enforces consistency. Code should act as a teacher to future developers.

- Learning Culture: Developers improve by seeing different approaches and teaching others.

- Boosting Well-Being: Rapid reviews and constructive feedback can increase developer satisfaction and productivity, and decrease burnout.

An interesting finding in “DevEx in Action: A study of its tangible impacts”: developers who report fast code reviews feel 20% more innovative!

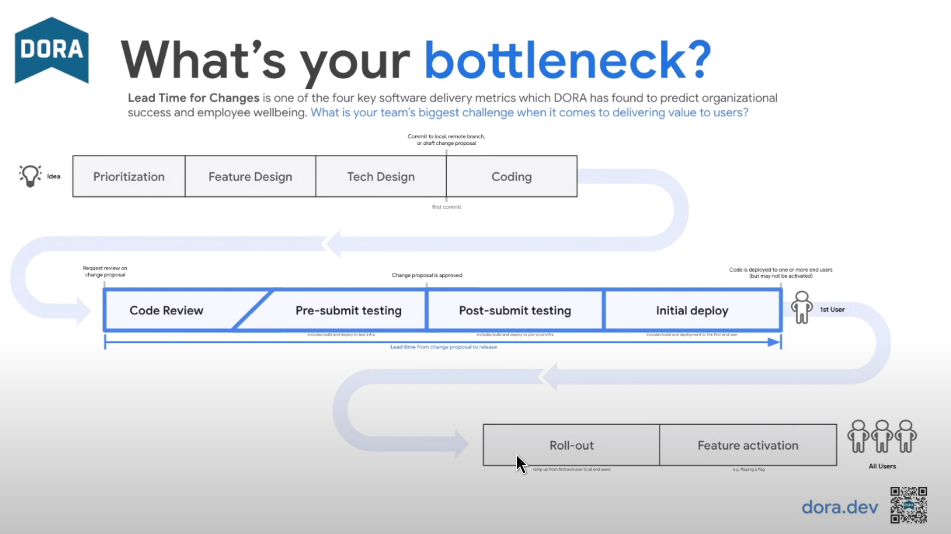

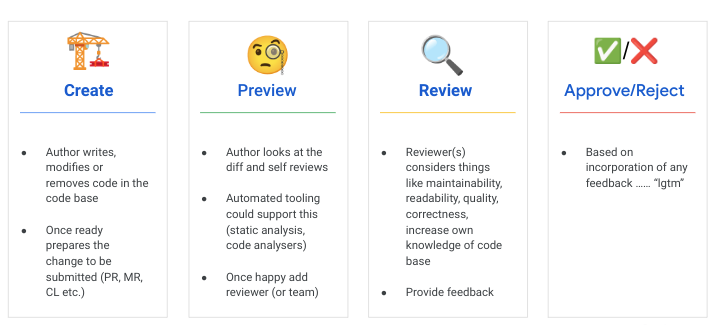

A typical code review workflow has four distinct phases.

Stage 0 is making the change itself, which could mean adding, modifying or removing code in the code base. The author should then prepare the change and set the reviewer(s) up for success by providing clarity and reducing friction:

- Self-review: Did I write clear commit messages? Does the change make sense on its own? Has the code been cleaned up and relevant documentation been added?

- Tests: Are my changes adequately tested? Does the code compile and pass relevant tests?

- Small Changes: Are my commits focused, making them easier to digest by the reviewer?

The review itself should embrace a generative culture and provide constructive feedback, typically guided by checklists and insights from internal documentation. The author and reviewer(s) should go into the review with the right mindset: knowing why the step exists, showing empathy towards each other while working towards a common goal, and keeping an open and curious mind. It is not a nit-picking exercise.

The focus can cover:

- Functionality: Does the code do what it’s supposed to? Can it be simplified?

- Readability: Is the code well-formatted, commented, and easy to follow? Does it follow design and style consistency? Does the code handle errors correctly?

- Maintainability: Can others easily understand and modify this code in the future? Do more people understand the code base?

- Security: Are there vulnerabilities? Review also ensures that no single engineer can commit arbitrary code without oversight.

Once any feedback is incorporated and the review has been approved (or rejected), the automated software delivery process takes over and rolls out the changes. Would you like to better understand code review at Google? The following papers go into detail, including why it was originally introduced, “to force developers to write code that other developers could understand”: “What Improves Developer Productivity at Google? Code Quality”, “Modern Code Review: A Case Study at Google” and “Engineering Impacts of Anonymous Author Code Review: A Field Experiment”.

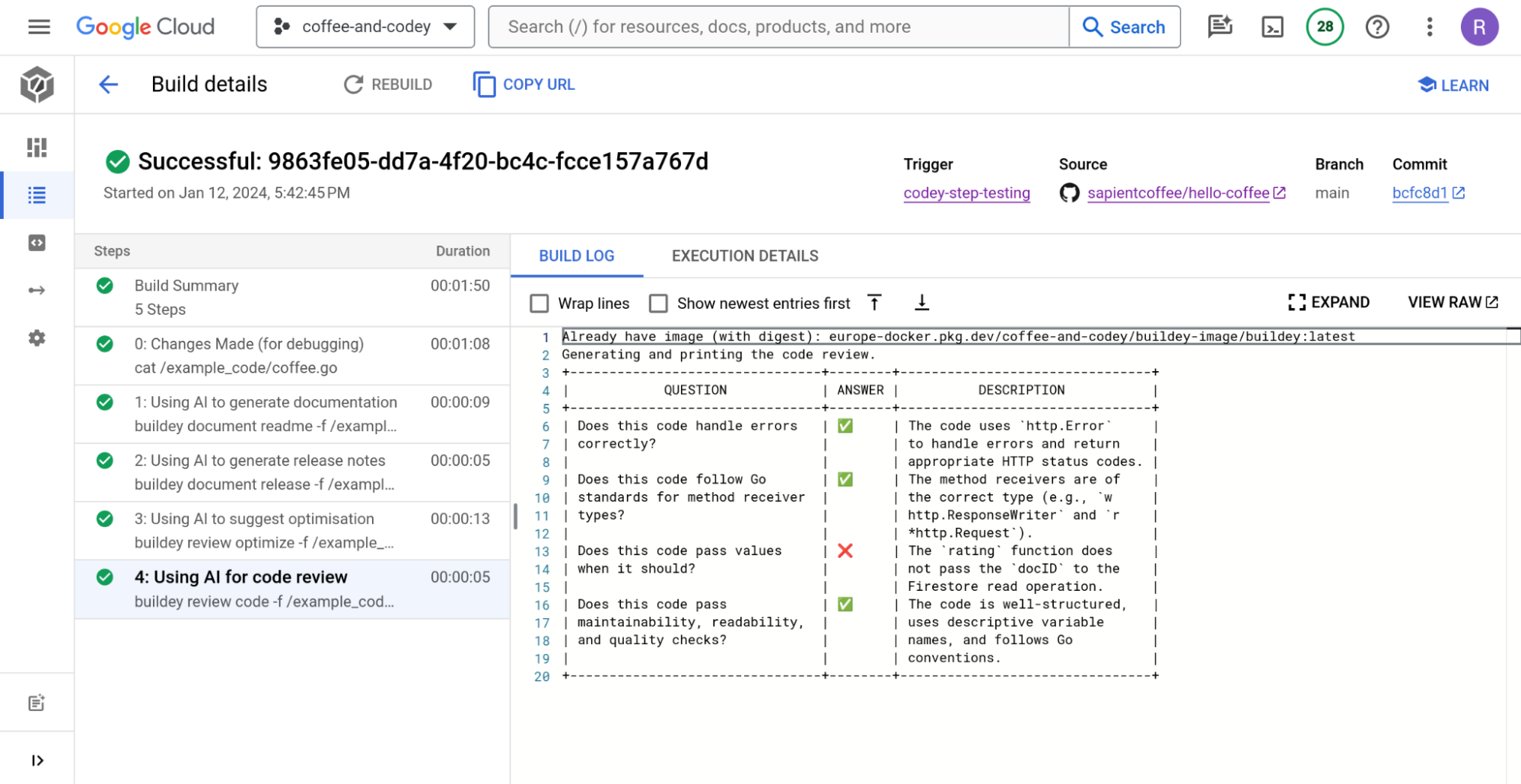

Experiment: GenAI

Now that we better understand the why and what of code reviews, what experiments can we run within our team to improve the code review process? Could GenAI help, either through tools like Gemini Code Assist (originally named Duet AI) or, with a platform engineering hat on, by interacting directly with LLMs like Gemini Pro?

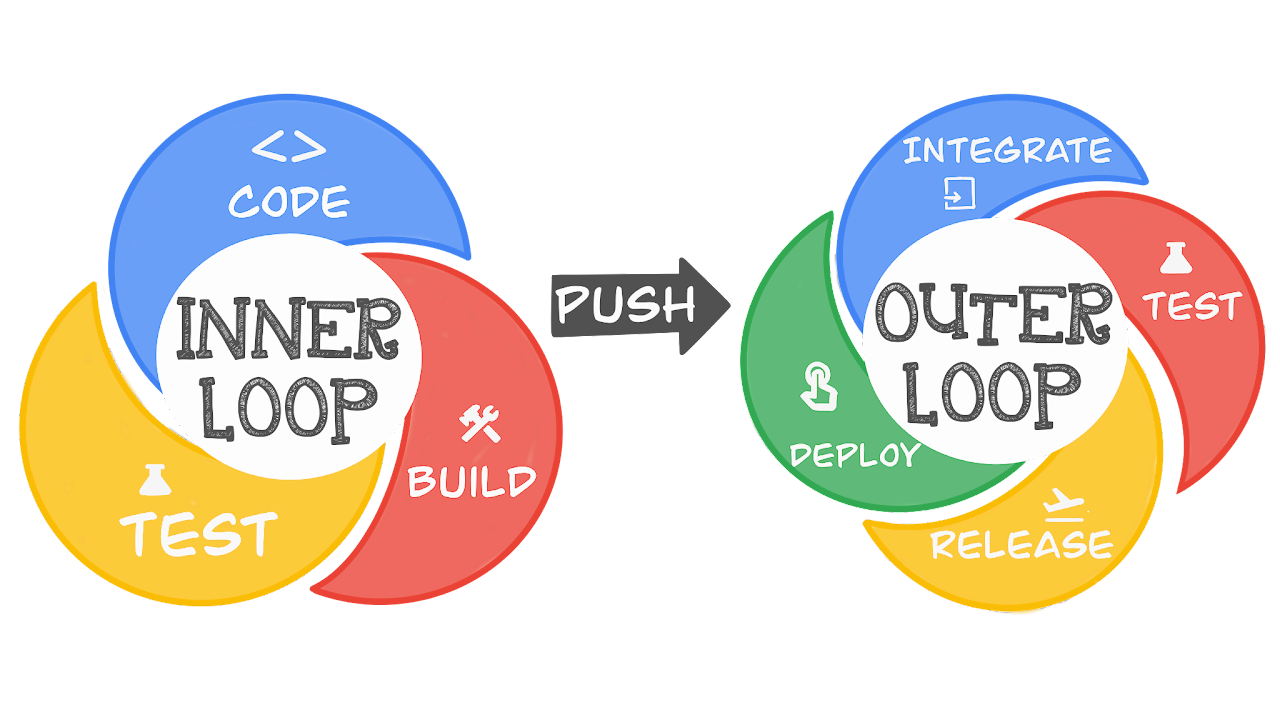

First let’s break this down into the inner loop and outer loop.

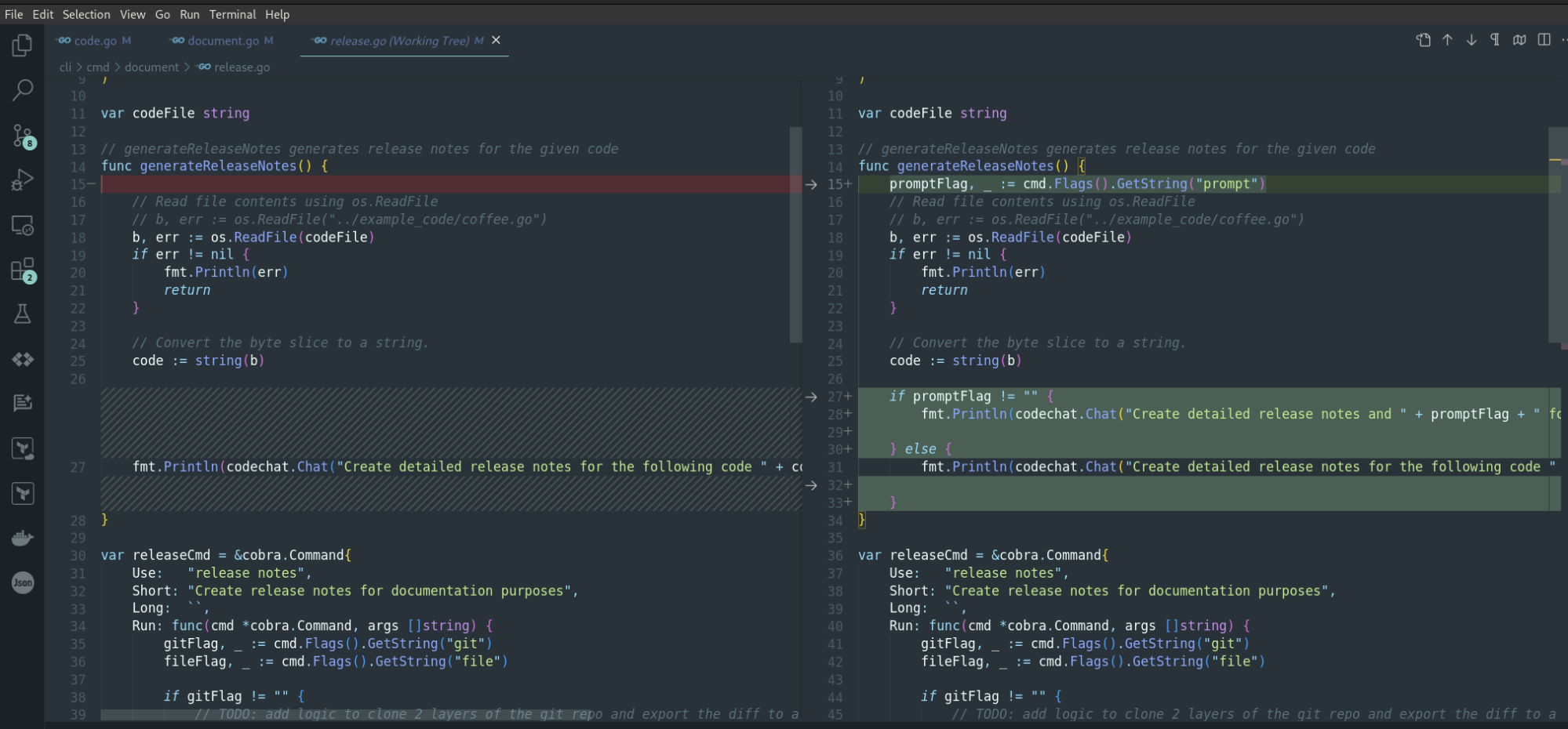

The inner loop in our case is part of the preparation/preview stage, and the IDE is very much the focal point. To see what has changed, the Git extension and its diff view are useful.

Wait, you promised me some GenAI! Could Gemini Code Assist, as part of Cloud Code, help? Some ideas that we decided to experiment with:

- Asking typical code review questions about the code

- Fixing identified code review points

- Asking source-controlled code review questions

- Finding inspiration for code review questions

- Generating meaningful git commit messages

- Generating git PR/MR descriptions or titles

We asked it various code review questions about the code, and also asked for help resolving the issues found:

- Does this code have meaningful Doc Comments?

- Fix this for me (if problems exist)

- Does this code handle errors correctly?

- Does this code follow Go standards?

- Does this code pass values it should?

- Does this code pass maintainability, readability and quality checks?

Typing each of these can be time-consuming, inconsistent and frustrating! Can the questions be stored in source control to provide a team- or org-level approach? Yes, they can. You could add standard questions to a file, then open the file to load it as context and use chat to list the questions and compare them against the code: “What are the questions in qu.txt?”, “Could you do a code review with the questions in qu.md on main.go?”.

What if you do not know what questions to ask in the first place? You could ask Code Assist to suggest some: “As a professional code reviewer who understands what good code should look like and how to optimize, provide a list of the top 5 questions you would ask about this specific code. The questions should be able to be answered with yes or no.” “Now review the code with these questions answering yes or no. When you answer no, provide some context to why.”

Can Code Assist help with creating commit messages and PR/MR descriptions? Yes, it can. The outer loop begins once the change has been pushed to Git, perhaps as a pull/merge request (PR/MR).

NOTE: The demo code for this can be found here. It was part of an internal talk introducing buidey as a CLI tool to help with various things; watch this space for a platform engineering post on building CLIs. This will be superseded by another project called devai.

After implementing our changes, we re-evaluated with the DORA quick check. Our lead time decreased substantially! Developers were also more satisfied as feedback cycles shortened and knowledge spread more effectively.

GenAI and Code Assist can help with some of the “heavy lifting” of code review by setting the reviewer up for success and by answering the time-consuming questions, allowing the reviewer to focus on adding value to the review. It’s important to say that this will not replace the code review process, knowledge transfer being an important reason, but it could help. A big lesson from this experiment, which deserves a post in its own right, is how best to engineer the prompts you send to the LLM.

Call to Action

Ready to improve your code reviews, or your software delivery process generally? Here’s what we recommend:

- Identify: As a cross-functional team, discuss the biggest friction points and use DORA to frame the conversation.

- Start Small: Don’t change everything at once.

- Experiment: Understand the problem space and make a small change combined with a hypothesis.

- Measure: Track metrics, like review time, to gauge improvements. The DORA four keys, or the specifics documented in the DORA capabilities, can be a helpful guide.

Let us know how your continual learning and improvement journey unfolds! Why not make a submission to the DevOps Awards?