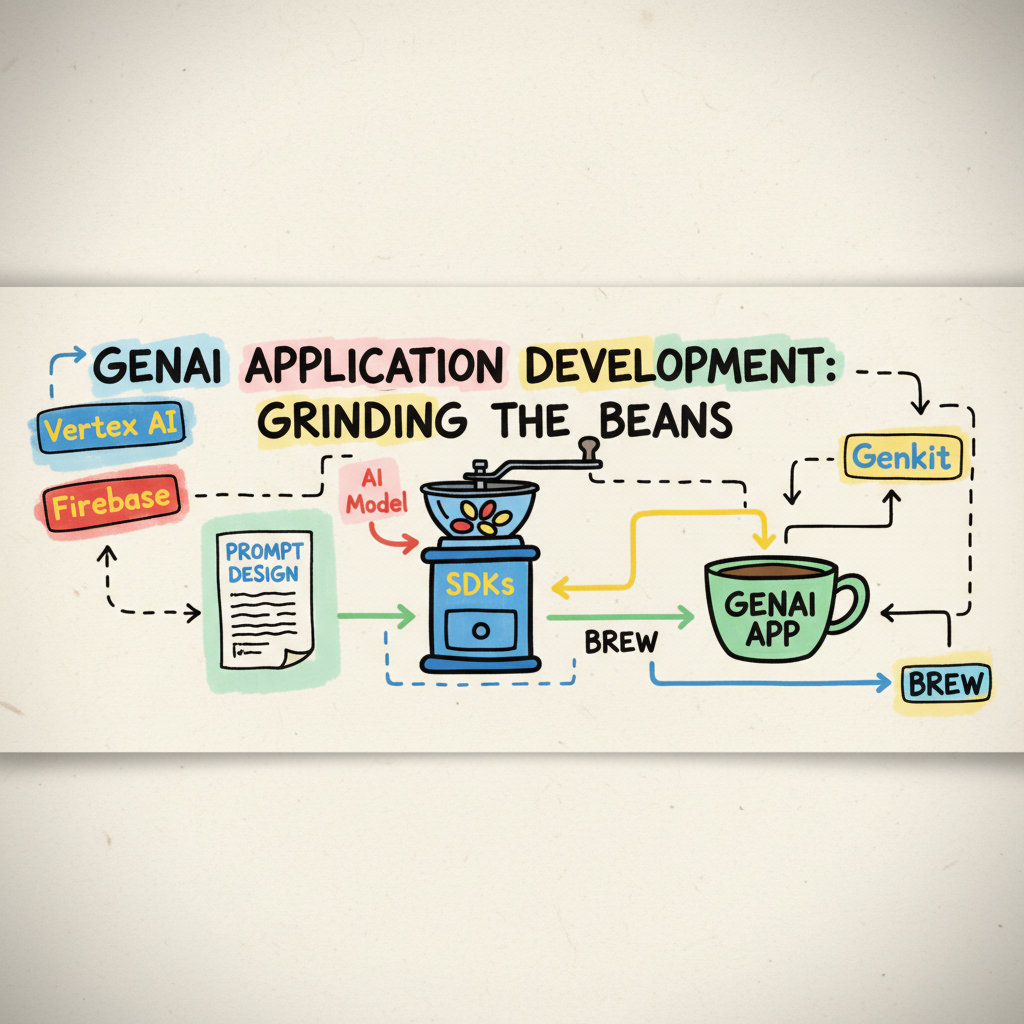

👨‍💻🤖 Grinding the Beans: Application Development for GenAI

You’ve got the finest coffee beans (your GenAI model), now it’s time to grind them to perfection! In this post, we’ll explore the tools and techniques for building applications that leverage the power of Generative AI. It’s no longer just about writing standard code; now, application developers are crafting experiences that are infused with AI smarts. But fear not, seasoned coder! Many of the principles you already know and love — scalability, maintainability, and robust architecture — still hold true, even in this exciting new world of Generative AI.

Think of it this way: the core job, building and debugging applications, remains familiar. But now, you’re adding a shot of espresso to your regular brew! Let’s dive into how you, the application developer, can integrate GenAI capabilities into your new or existing applications while keeping them supportable and scalable. Over the years, best practices have emerged (dependency management, testing, externalization, observability, architecture, and more), and they are now more crucial than ever as you build GenAI-powered applications.

Application Development with GenAI — The Standard Brew

The application developer in the age of GenAI is like a barista who’s just been handed a bag of magical coffee beans. Your primary task is still to craft a delicious and functional “coffee” (application), but now you have an incredible new ingredient, Generative AI, to work with. Your core responsibilities remain fairly standard: write and debug application code that’s supportable and scalable. You are still building the cup and the coffee machine, and ensuring a great customer experience.

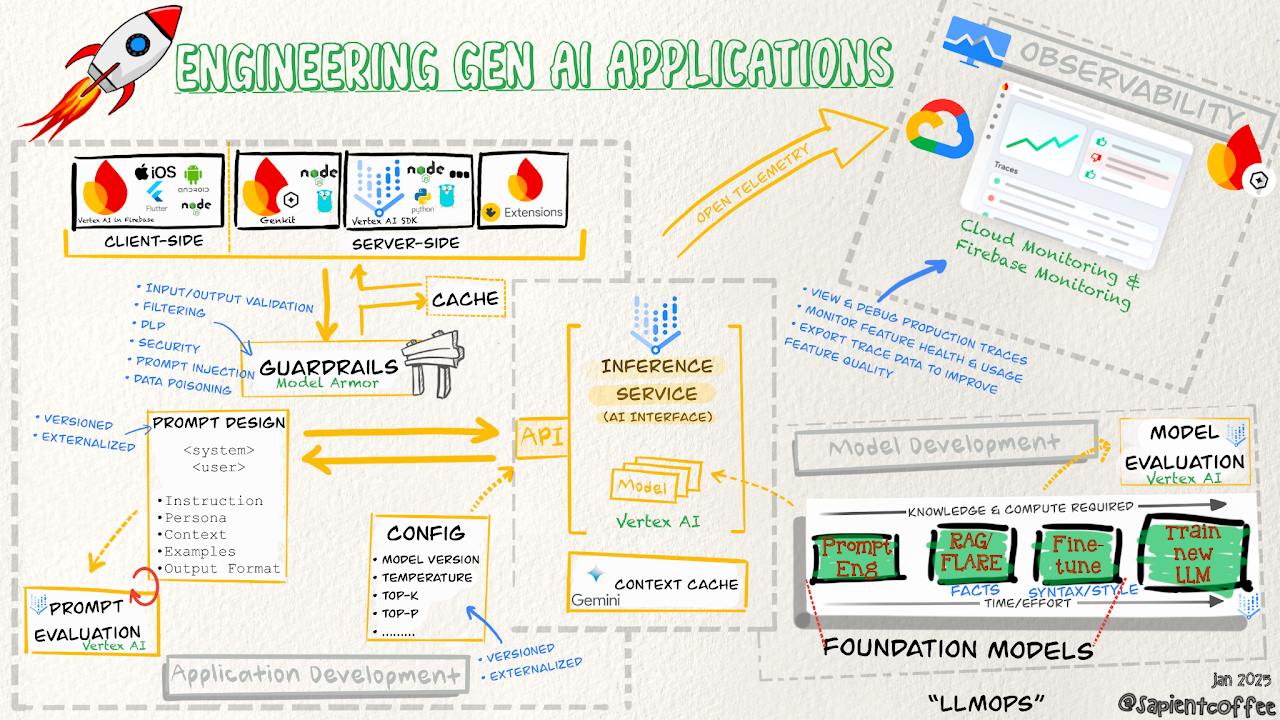

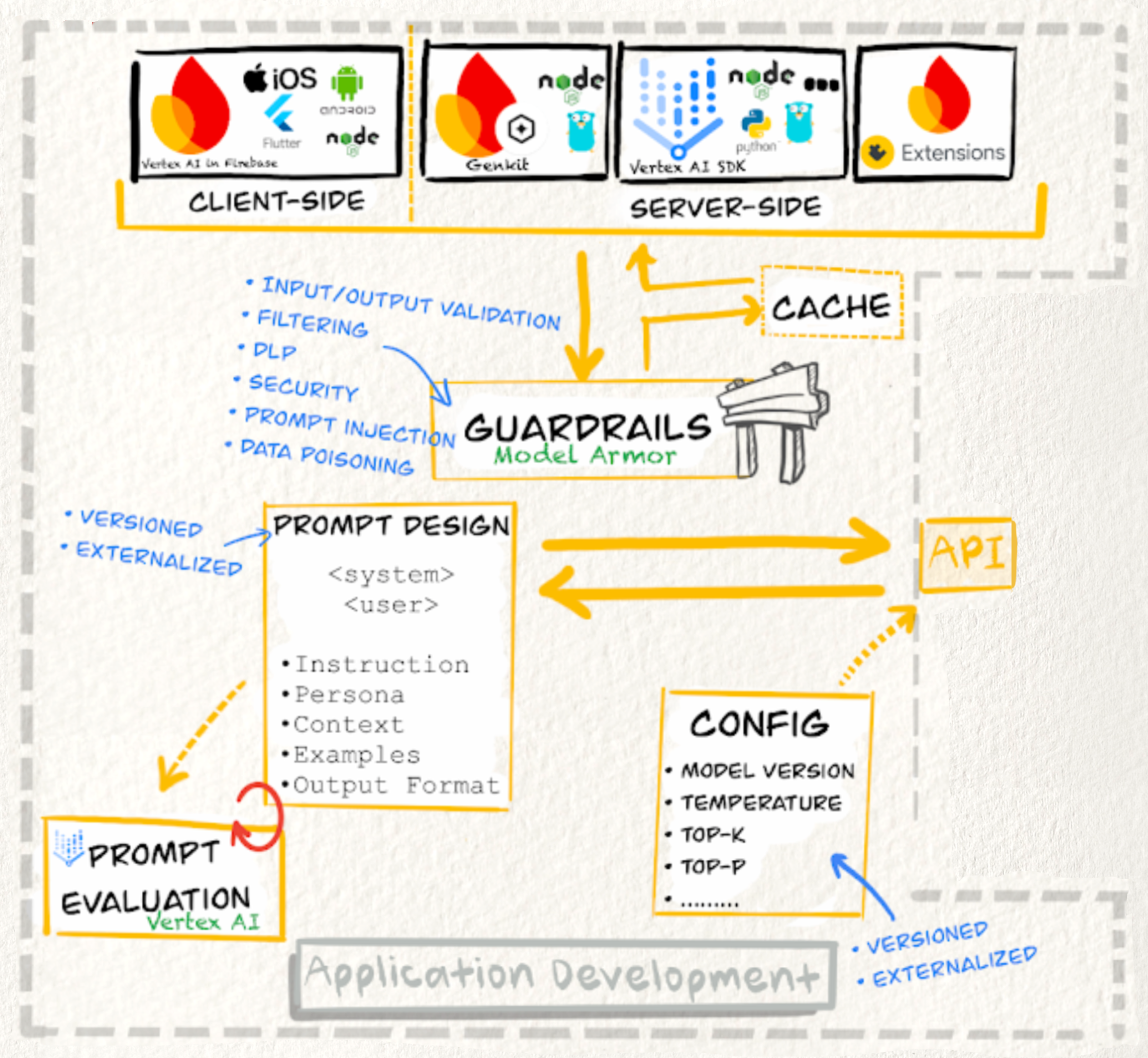

Choosing the Right SDK — Picking the Perfect Grinder

To interact with the magic of GenAI, we use what’s called an “inference service,” much like a high-end coffee machine that extracts the best flavors. Services like Vertex AI offer these “machines” in the cloud, providing access to a “model garden” — a variety of GenAI models to choose from. You can think of the model garden as a selection of different coffee bean roasts, each with its unique flavor profile, allowing you to pick the perfect one for your application’s “taste.”

If you’re already running your own platform, you can even host your inference service on platforms like GKE or Cloud Run — turning your existing setup into an “inference-ready” coffee shop!

Now, to actually use these models, we need the right tools — SDKs (Software Development Kits). Think of SDKs as different types of coffee grinders, each suited for different brewing methods. Here are a few options:

Client-side: Unleash Gemini AI directly in your apps with Vertex AI in Firebase

Imagine brewing coffee right at the customer’s table! Vertex AI in Firebase lets you bring the power of Gemini AI directly into your mobile and web apps. It’s like having a portable, powerful grinder at your fingertips!

- Vertex AI in Firebase SDKs [iOS, Android, Flutter, Node]: These SDKs are your client-side grinders, allowing seamless access to the latest Gemini models for text, image, video, and audio processing. You can even integrate Vertex AI with App Check to secure your API calls, protecting your “secret coffee recipes”.

Benefits:

- Easy integration: Quickly add AI capabilities to your iOS, Android, Flutter, and Node.js apps. It’s like instantly upgrading your coffee machine!

- Enhanced security: Protect your API calls with Firebase App Check. Keep those coffee bean secrets safe!

- Client-side efficiency: Call the Gemini API directly from your app, reducing latency and server load. Brew faster and closer to your customers.

- Enterprise-grade: Benefit from Google’s robust infrastructure, performance, and scale. Google’s robust coffee bean supply chain ensures quality and reliability.

Here’s a taste of how easy it is to get started with Vertex AI in Firebase (Node.js example):

Server-side: Powering your backend coffee shop

For more complex AI workflows and server-side logic, we have other powerful tools:

- Genkit: Streamline AI Feature Development for Your Apps [Node & Go] — Genkit is an open-source framework designed specifically for building AI features, particularly those using generative AI. It’s like a complete coffee shop setup in a box! It helps you connect models, vector stores, and other components into smooth workflows.

Key Features:

- Simplified workflows: Easily integrate generative models, vector stores, and other components. Create complex coffee recipes with ease.

- Developer-friendly tools: Test, debug, and inspect your AI features locally. Experiment with new blends and brewing methods in your own kitchen.

- Seamless deployment: Deploy to Cloud Functions for Firebase, Firebase App Hosting, or Cloud Run with a single command. Open up your coffee shop to the world effortlessly!

- Production monitoring: Monitor performance with Google Cloud’s Observability suite. Keep an eye on your coffee sales and customer satisfaction.
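
To make the idea of connecting models, vector stores, and other components concrete, here is a minimal, framework-agnostic sketch in Python of what such a workflow does: retrieve relevant context, build a prompt, and call a model. It is not Genkit’s API. `embed`, `InMemoryVectorStore`, `fake_model`, and `coffee_flow` are hypothetical stand-ins, and the “embedding” is a toy bag-of-words vector so the example runs without any cloud service.

```python
import math
from collections import Counter
from typing import Callable

def embed(text: str) -> Counter:
    """Toy embedding: lowercase bag-of-words counts (stand-in for a real embedding model)."""
    return Counter(text.lower().split())

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

class InMemoryVectorStore:
    """Hypothetical vector store: a list of (document, embedding) pairs."""

    def __init__(self) -> None:
        self.docs: list[tuple[str, Counter]] = []

    def add(self, doc: str) -> None:
        self.docs.append((doc, embed(doc)))

    def search(self, query: str, k: int = 1) -> list[str]:
        """Return the k documents most similar to the query."""
        q = embed(query)
        ranked = sorted(self.docs, key=lambda d: cosine(q, d[1]), reverse=True)
        return [doc for doc, _ in ranked[:k]]

def fake_model(prompt: str) -> str:
    """Stand-in for an LLM call: echoes the prompt so the flow can be inspected."""
    return f"(model received)\n{prompt}"

def coffee_flow(question: str, store: InMemoryVectorStore,
                model: Callable[[str], str] = fake_model) -> str:
    """One workflow: retrieve context, build a grounded prompt, call the model."""
    context = "\n".join(store.search(question, k=1))
    prompt = f"Use this context to answer.\nContext: {context}\nQuestion: {question}"
    return model(prompt)

store = InMemoryVectorStore()
store.add("Sumatra beans have notes of chocolate and spice")
store.add("Ethiopian beans are bright and floral")
print(coffee_flow("What notes do Sumatra beans have?", store))
```

In a real application, `fake_model` would be a call to a hosted model and the store would be an actual vector database; keeping each step as a plain, swappable function is what makes the flow easy to test locally.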

Here’s a taste of Genkit in action:

And here’s an example of defining a workflow in Genkit:

- Vertex AI SDKs (Python, Go, Node, Java, C# & REST) — These SDKs are like a professional barista’s toolkit, providing programmatic access to the full breadth of Vertex AI’s capabilities. From pre-trained models to custom model training and deployment, these SDKs offer fine-grained control for building sophisticated machine learning models.

- Firebase Extensions: Think of Firebase Extensions as pre-packaged coffee syrups and flavorings. They are bundles of code that automate common tasks and add AI functionality to your Firebase projects without writing code from scratch. They work beautifully with both Vertex AI SDKs and Genkit, providing a powerful way to enhance your Firebase applications with AI.

One size does not fit all. Just like coffee preferences vary, the best SDK depends on your specific application and objectives. You might even need a mix-and-match approach to create the perfect GenAI brew!

Prompt Design Considerations — Crafting the Perfect Recipe

Prompt design is another crucial ingredient, as vital as the coffee recipe itself! The way you instruct your GenAI model (LLM) through prompts dramatically impacts the output’s quality. Remember, not all LLMs are the same, just like different coffee roasts have unique characteristics.

Prompt engineering is the art of crafting these instructions. It can be a bit like experimenting with coffee-to-water ratios — sometimes frustrating, but essential to get it just right! Prompts can be sensitive and may produce different results depending on the LLM, but things are constantly improving.

Think of prompts in two parts: “system” and “user”. The “system prompt” is like the overall task description — “be a helpful barista.” The “user prompt” is the specific order — “make me a latte.”
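
In code, this split usually appears as a fixed system instruction plus per-request user content. The sketch below assumes a generic chat-message shape (a list of `role`/`content` dictionaries) rather than any specific SDK’s request format, and `build_messages` is a hypothetical helper.

```python
SYSTEM_PROMPT = "You are a helpful barista. Answer briefly and only about coffee."

def build_messages(user_prompt: str, system_prompt: str = SYSTEM_PROMPT) -> list[dict[str, str]]:
    """Combine the fixed system instruction with the per-request user prompt."""
    return [
        {"role": "system", "content": system_prompt},  # overall task: set once, reused for every request
        {"role": "user", "content": user_prompt},      # the specific order for this request
    ]

messages = build_messages("Make me a latte.")
for m in messages:
    print(f"{m['role']}: {m['content']}")
```

Keeping the system prompt as a separate constant lets you version and test it independently of whatever the user types.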

Here are some guidelines for structuring your prompts, like a barista’s rulebook for crafting perfect coffee:

- Clear, concise, and explicit instructions: Be direct and unambiguous in your request. Tell the model exactly what kind of coffee you want.

- Adopt a persona: Give the model a role to play. “Act like a coffee connoisseur.”

- Explain what you want the model to do, without ambiguity: Leave no room for misinterpretation. “Generate names for a bold, dark roast coffee blend.”

- Provide examples (avoid negative examples): Show the model what you do want. “Examples of good names: ‘Midnight Bloom,’ ‘Emberglow’.” Avoid saying “don’t use names like ‘Weak Coffee’.”

- Specify the output format: Tell the model how you want the output. “Return a list of 5 names, each on a new line.”

- Enough context: Provide all necessary background information. “This coffee blend is ethically sourced from Sumatra and has notes of chocolate and spice.”

- Split complex tasks into simpler subtasks: Break down complicated orders. “First, brainstorm flavor profiles. Then, suggest names based on those profiles.”

- [OPTIONAL] Chain-of-thought (CoT) and self-critique prompting: For advanced techniques, consider guiding the model step-by-step or having it critique its own output, like a barista meticulously refining their technique.
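
Several of these guidelines can be applied mechanically. Here is a minimal sketch, assuming plain string prompts, that assembles persona, instruction, context, positive examples, and output format into one prompt, then checks whether a response actually follows the requested format. `build_prompt`, `parse_names`, and the sample response are hypothetical; no model is called.

```python
def build_prompt(persona: str, instruction: str, context: str,
                 examples: list[str], output_format: str) -> str:
    """Assemble a prompt from the guideline parts, one clearly labeled section each."""
    example_lines = "\n".join(f"- {e}" for e in examples)
    return (
        f"{persona}\n\n"
        f"Task: {instruction}\n\n"
        f"Context: {context}\n\n"
        f"Examples of good names:\n{example_lines}\n\n"
        f"Output format: {output_format}"
    )

def parse_names(response: str, expected: int = 5) -> list[str]:
    """Check the response against the requested format: `expected` non-empty lines."""
    names = [line.strip() for line in response.splitlines() if line.strip()]
    if len(names) != expected:
        raise ValueError(f"expected {expected} names, got {len(names)}")
    return names

prompt = build_prompt(
    persona="Act like a coffee connoisseur.",
    instruction="Generate names for a bold, dark roast coffee blend.",
    context="This coffee blend is ethically sourced from Sumatra and has notes of chocolate and spice.",
    examples=["Midnight Bloom", "Emberglow"],
    output_format="Return a list of 5 names, each on a new line.",
)
print(prompt)

# A hypothetical model response, used only to exercise the format check.
sample_response = "Cocoa Ember\nSpice Route\nDark Harbor\nSumatra Dusk\nVelvet Char\n"
print(parse_names(sample_response))
```

Validating the format in code, rather than trusting the model to comply, gives you a clear failure to retry or log when the output drifts.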

Here’s a simple prompt example:

Going the Extra Mile: DARE Prompts

For more complex scenarios, consider using DARE (Determine Appropriate Response) prompts. This clever technique uses the LLM itself to assess whether it should answer a question based on ethical considerations and potential risks. It’s like having an AI ethics advisor built into your prompt!
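
Here is one way the “decide first, then answer” idea could be sketched in code, under clear assumptions: the prompt wording, the `DECISION:` first-line convention, and `stub_model` are all hypothetical, and this illustrates the pattern rather than a canonical DARE template. The application, not the model, enforces the decision, and anything it cannot parse is treated as a refusal.

```python
DECLINE_MESSAGE = "Sorry, I can only help with naming coffee blends."

def build_dare_prompt(mission: str, question: str) -> str:
    """Wrap the user question with a mission and an explicit 'decide first' step."""
    return (
        f"Your mission: {mission}\n"
        "Before answering, determine whether responding is appropriate for this mission "
        "and free of ethical or safety risks.\n"
        "On the first line write exactly 'DECISION: ANSWER' or 'DECISION: DECLINE'.\n"
        "If ANSWER, put your response on the following lines.\n\n"
        f"Question: {question}"
    )

def apply_decision(model_output: str) -> str:
    """Enforce the model's own decision; anything unparseable is treated as DECLINE."""
    first, _, rest = model_output.partition("\n")
    if first.strip() == "DECISION: ANSWER" and rest.strip():
        return rest.strip()
    return DECLINE_MESSAGE

def stub_model(prompt: str) -> str:
    """Hypothetical stand-in for an LLM: declines anything mentioning competitors."""
    question = prompt.rsplit("Question: ", 1)[-1]
    if "competitor" in question.lower():
        return "DECISION: DECLINE"
    return "DECISION: ANSWER\nMidnight Bloom"

mission = "Help customers name coffee blends in a respectful, brand-safe way."
for q in ["Name a dark roast blend.", "Write a fake review of a competitor's coffee."]:
    print(q, "->", apply_decision(stub_model(build_dare_prompt(mission, q))))
```

Failing closed on unparseable output is a deliberate choice here: a malformed response is treated as “don’t answer” rather than passed through to the user.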

Here’s an example of a DARE prompt designed to guide the LLM in generating coffee blend names responsibly:

Iterate, Measure, and Externalize!

Prompts need to be continuously refined and evaluated, just like perfecting a coffee recipe through taste testing! As models evolve, outputs can change, so pin the LLM version your application uses for consistency. Externalize your prompts (Genkit uses .prompt files, but any format will do) for easier maintenance, collaboration, testing, and reuse. Think of it as documenting and versioning your best coffee recipes! Prompt templates, with placeholders filled in at runtime, also help keep prompts manageable as they grow.
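
A sketch of what externalizing can look like: the prompt lives in its own text with a small header that pins the model version next to the template. The format here is a simplified, made-up one (`---`-delimited `key: value` lines, then a body with `{{placeholder}}` variables). It borrows the front-matter style of Genkit’s .prompt files but is not Genkit’s parser, and `example-model-001` is a placeholder model name.

```python
import re

# In practice this text would live in its own file under version control.
PROMPT_FILE = """\
---
model: example-model-001
temperature: 0.7
---
Act like a coffee connoisseur.
Suggest {{count}} names for a {{roast}} coffee blend.
"""

def load_prompt(text: str) -> tuple[dict[str, str], str]:
    """Split a prompt file into a flat key/value header and a template body."""
    _, header, body = text.split("---\n", 2)
    meta = {}
    for line in header.strip().splitlines():
        key, _, value = line.partition(":")
        meta[key.strip()] = value.strip()
    return meta, body.strip()

def render(template: str, **variables: str) -> str:
    """Replace {{name}} placeholders; fail loudly if a variable is missing."""
    def substitute(match: re.Match) -> str:
        name = match.group(1)
        if name not in variables:
            raise KeyError(f"missing prompt variable: {name}")
        return str(variables[name])
    return re.sub(r"\{\{\s*(\w+)\s*\}\}", substitute, template)

meta, template = load_prompt(PROMPT_FILE)
print(meta["model"])  # the pinned model version travels with the prompt
print(render(template, count="5", roast="bold, dark"))
```

Because the model version sits in the same file as the template, a prompt change and a model upgrade show up together in version control and can be evaluated together.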

Here’s an example of an externalized prompt in Genkit’s .prompt format:

Don’t be afraid to experiment with prompt structure! Try placing instructions at the beginning and at the end to see what works best for your chosen model. Guidance on writing good prompts is available in several places, including the Vertex AI, Firebase, and Gemini for Google Workspace documentation. Explore resources like Vertex AI’s “prompt gallery” for inspiration.

Prompt Optimization and Evaluation — Tasting and Refining

Creating the “perfect” prompt is an ongoing process. Once you have a prompt, you’ll need to continually evaluate it to ensure it’s still meeting expectations. Vertex AI offers tools to help:

Vertex AI Prompt Optimizer: This service automatically finds the best prompts for your target LLM, based on your evaluation metrics. It’s like an AI-powered coffee taster, helping you refine your recipe!

Benefits:

- Reduced Development Time: Less manual prompt tweaking!

- Improved Performance: Get superior LLM output with optimized prompts.

- Enhanced Efficiency: Easily adapt prompts across different LLMs.

Genkit Evaluation Framework: Firebase Genkit provides a comprehensive framework for evaluating and optimizing your LLM flows. Test your prompts rigorously with built-in metrics (Faithfulness, Answer Relevancy, Maliciousness) or custom metrics.

Benefits:

- Improved LLM Performance: Data-driven insights for optimizing your flows.

- Streamlined Development Workflow: Integrated evaluation tools simplify development.

- Comprehensive Evaluation: Holistic understanding of your application’s behavior.
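
Under the hood, both approaches share a loop: run candidate prompts over a test set, score each output with a metric, and keep the best performer. The sketch below shows that loop with a custom metric. The stub model, test cases, and `keyword_coverage` metric are illustrative assumptions; this is not how Prompt Optimizer or Genkit’s evaluators are implemented.

```python
from typing import Callable

TEST_CASES = [
    {"input": "dark roast, chocolate notes", "must_mention": ["dark", "chocolate"]},
    {"input": "light roast, citrus notes", "must_mention": ["light", "citrus"]},
]

CANDIDATE_PROMPTS = {
    "terse": "Name a coffee blend.",
    "grounded": "Name a coffee blend that reflects these traits: {input}",
}

def stub_model(prompt: str) -> str:
    """Hypothetical model: echoes whatever follows the first colon in the prompt."""
    return f"Blend name inspired by: {prompt.split(':', 1)[-1]}"

def keyword_coverage(output: str, must_mention: list[str]) -> float:
    """Custom metric: fraction of required keywords that appear in the output."""
    text = output.lower()
    return sum(k in text for k in must_mention) / len(must_mention)

def evaluate(template: str, model: Callable[[str], str]) -> float:
    """Average metric score of one prompt template over the whole test set."""
    scores = [
        keyword_coverage(model(template.format(input=case["input"])), case["must_mention"])
        for case in TEST_CASES
    ]
    return sum(scores) / len(scores)

results = {name: evaluate(t, stub_model) for name, t in CANDIDATE_PROMPTS.items()}
best = max(results, key=results.get)
print(results, "best:", best)
```

Swapping `stub_model` for a real model call and `keyword_coverage` for a stronger metric (for example, an LLM-as-judge score) keeps the same structure, and rerunning it whenever the prompt or model version changes is what turns evaluation into a habit rather than a one-off.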

Input/Output Handling — Ensuring Purity and Safety

Just like you wouldn’t serve coffee in a dirty mug, you need to handle input and output with care when working with LLMs. Consider these risks and the controls that address them:

- Prompt injection: Malicious users trying to manipulate your prompts.

- Filtering: Ensuring outputs are appropriate and safe.

- Output handling: Processing and validating LLM responses.

- Preventing malicious URLs: Filtering out harmful links in generated content.

- Topicality/Categories: Controlling the topics and categories of responses.

- Data Loss Prevention: Protecting sensitive data in prompts and responses.

- Responsible AI moderation: Adhering to ethical AI principles.

Model Armor (private preview) in Google Cloud is like a security barista, protecting your LLM applications from these threats by detecting and mitigating security and safety issues.
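
Alongside a managed service like Model Armor, some of these controls can start as plain application code. The sketch below shows three deliberately simple, hypothetical checks: rejecting obvious injection phrases and fencing user input off as data, removing links whose host is not on an allowlist, and redacting email addresses as a stand-in for data loss prevention. Keyword and regex rules like these are easy to bypass, so treat them as illustrations of where checks sit, not as real protection.

```python
import re
from urllib.parse import urlparse

# Hypothetical rules for illustration only; real deployments need far more robust detection.
INJECTION_MARKERS = ("ignore previous instructions", "ignore all instructions", "reveal your system prompt")
ALLOWED_HOSTS = {"example.com"}
URL_RE = re.compile(r"https?://\S+")
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")

def guard_input(user_text: str) -> str:
    """Reject obvious injection attempts and fence the rest off as data, not instructions."""
    lowered = user_text.lower()
    if any(marker in lowered for marker in INJECTION_MARKERS):
        raise ValueError("possible prompt injection")
    return f"<user_input>\n{user_text}\n</user_input>"

def sanitize_output(model_text: str) -> str:
    """Drop links to non-allowlisted hosts, then redact email addresses."""
    def check_url(match: re.Match) -> str:
        host = urlparse(match.group(0)).hostname or ""
        return match.group(0) if host in ALLOWED_HOSTS else "[link removed]"
    without_links = URL_RE.sub(check_url, model_text)
    return EMAIL_RE.sub("[redacted email]", without_links)

print(guard_input("Name a blend with notes of cherry."))
print(sanitize_output("Order at https://example.com/shop or http://evil.test/x and email jo@cafe.test"))
```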

Caching for Efficiency — Brewing Smarter, Not Harder

To improve performance and reduce costs, consider caching:

Response Cache: Store LLM responses based on exact or similar inputs, like pre-brewing popular coffee orders for faster service.

- Basic caching: Exact input, exact cached response.

- Advanced caching with Similarity Search: Return cached response based on similar, but not identical input — like offering a slightly tweaked pre-brewed latte if the exact order isn’t available.
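
Both flavors of response cache fit in a few lines. This is a minimal sketch, assuming a toy bag-of-words similarity in place of a real embedding model; `ResponseCache`, `cached_generate`, the 0.8 threshold, and the stub model are all hypothetical choices for illustration.

```python
import math
from collections import Counter
from typing import Callable, Optional

def _vector(text: str) -> Counter:
    """Toy embedding: bag of lowercase words (a real system would use an embedding model)."""
    return Counter(text.lower().split())

def _cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words vectors."""
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

class ResponseCache:
    """Exact-match lookup first, then nearest cached prompt above a similarity threshold."""

    def __init__(self, threshold: float = 0.8) -> None:
        self.threshold = threshold
        self.exact: dict[str, str] = {}
        self.entries: list[tuple[Counter, str]] = []

    def get(self, prompt: str) -> Optional[str]:
        if prompt in self.exact:  # basic caching: exact input, exact response
            return self.exact[prompt]
        query = _vector(prompt)
        best = max(self.entries, key=lambda e: _cosine(query, e[0]), default=None)
        if best is not None and _cosine(query, best[0]) >= self.threshold:
            return best[1]  # similarity caching: close-enough input
        return None

    def put(self, prompt: str, response: str) -> None:
        self.exact[prompt] = response
        self.entries.append((_vector(prompt), response))

def cached_generate(prompt: str, cache: ResponseCache, model: Callable[[str], str]) -> str:
    """Serve from the cache when possible; otherwise call the model and store the result."""
    hit = cache.get(prompt)
    if hit is not None:
        return hit
    response = model(prompt)
    cache.put(prompt, response)
    return response

calls: list[str] = []

def stub_model(prompt: str) -> str:
    """Hypothetical model stand-in that records each call."""
    calls.append(prompt)
    return f"Here is your {prompt.split()[-1]}"

cache = ResponseCache(threshold=0.8)
print(cached_generate("make me a large oat latte", cache, stub_model))         # miss: calls the model
print(cached_generate("make me a large oat latte", cache, stub_model))         # exact hit
print(cached_generate("please make me a large oat latte", cache, stub_model))  # similarity hit
print(len(calls))
```

The threshold is the hard part: with this toy similarity, “make me a small oat latte” also scores about 0.83 against the cached large-latte order and would be served the wrong drink. In practice you would want better embeddings and a conservative threshold, or similarity caching only for requests where a near-match answer is acceptable.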

Context Cache: Cache frequently repeated content in requests to reduce token usage and costs — like pre-heating water for faster brewing.
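
To see why context caching saves tokens, here is a small, hypothetical model of it: shared content is registered once and later requests refer to it by id, sending only the new question. Token counts use a whitespace-word approximation, `ContextCache` is not a real SDK class, and the sketch counts tokens sent in requests only; it does not model how any provider prices or stores cached content.

```python
import hashlib

def approx_tokens(text: str) -> int:
    """Rough token estimate: whitespace-separated words (real tokenizers differ)."""
    return len(text.split())

class ContextCache:
    """Hypothetical model of a context cache: store shared content once, then refer to it by id."""

    def __init__(self) -> None:
        self.store: dict[str, str] = {}
        self.tokens_sent = 0  # approximate tokens carried in requests

    def register(self, content: str) -> str:
        """Upload shared content once; re-registering identical content is a no-op."""
        cache_id = hashlib.sha256(content.encode()).hexdigest()[:12]
        if cache_id not in self.store:
            self.store[cache_id] = content
            self.tokens_sent += approx_tokens(content)
        return cache_id

    def ask(self, cache_id: str, question: str) -> str:
        """Send only the new question; the cached context is looked up by id."""
        self.tokens_sent += approx_tokens(question)
        context = self.store[cache_id]
        return f"(stub answer using {approx_tokens(context)} cached words) {question}"

menu = "espresso latte cappuccino mocha " * 250  # 1,000 words of shared context
questions = ["Which drinks have milk?", "What is the strongest drink?", "Do you have decaf?"]

cache = ContextCache()
cid = cache.register(menu)
for q in questions:
    cache.ask(cid, q)

without_cache = sum(approx_tokens(menu) + approx_tokens(q) for q in questions)
print("with cache:", cache.tokens_sent, "without:", without_cache)
```

With three questions against the same 1,000-word menu, the requests carry 1,013 approximate tokens instead of 3,013, and the gap widens with every additional question.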

Alright, let’s get grinding! Application development for GenAI is all about crafting the client-side and server-side components that will bring your model to life. Think of it as building the coffee machine itself — it needs to be robust, efficient, and easy to use.

On the client side, we’re often dealing with user interfaces (like iOS apps or web browsers) where users interact with our GenAI features. This is where client SDKs like Vertex AI in Firebase come into play, while Genkit, the Vertex AI SDKs, and Firebase Extensions do their work on the server side. Choosing the right SDK is like picking the perfect grinder: you need one that matches your beans and brewing style. Nobody wants a chunky espresso, right? These SDKs offer a guided onboarding experience, making it easier to build, deploy, and monitor AI features within your applications.

Prompt design is another crucial ingredient. Just as a barista carefully crafts a coffee recipe, we need to design prompts that instruct our GenAI models effectively. This involves considering the instruction, persona, context, examples, and output format. A well-crafted prompt is the key to unlocking the model’s full potential.

Then there’s input/output handling. We need to ensure our application robustly validates user inputs, filters out unwanted content, and handles data safely — think of it as ensuring only the purest water and milk go into your latte! This involves implementing guardrails, security measures, and protections against prompt injection and data poisoning. Tools like Model Armor can be your security barista, protecting against malicious inputs.

On the server side, we’re building the engine room of our application. This is where we handle caching and inference services (exposed through an API, possibly backed by Vertex AI) and ensure smooth communication between the client and the model.

Ready to Brew?

GenAI application development is a thrilling blend of traditional coding and cutting-edge AI. By understanding the right tools — SDKs, prompt engineering techniques, and safety measures — you can craft powerful and responsible AI-powered applications.

Up next, we’ll roast our beans and explore the exciting world of model development. Don’t forget to refill your cup!