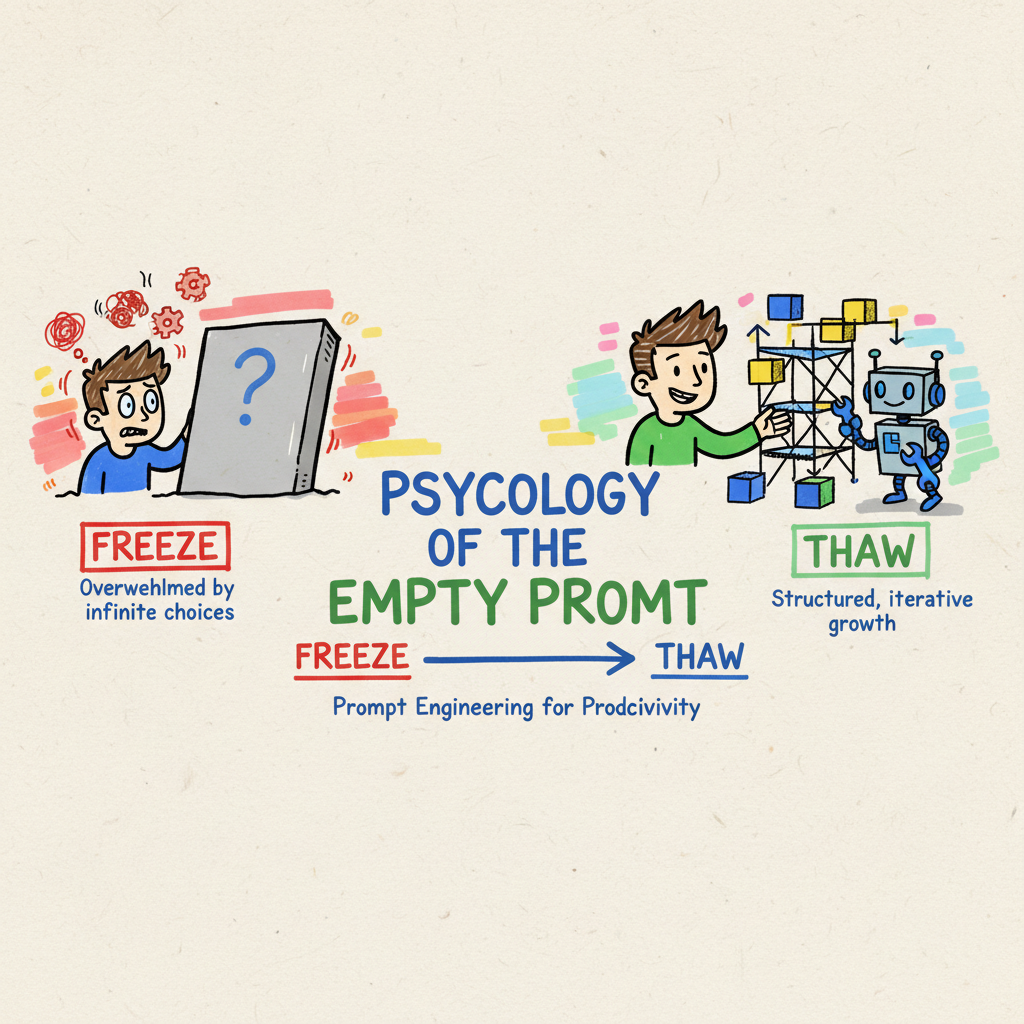

🧠🧊 The Psychology of the Empty Prompt: Why We Freeze & How to Thaw

🧊 The Freeze

Picture this. You’ve got a fresh cup of coffee—maybe a nice Ethiopian Yirgacheffe dripping with floral notes. You sit down, crack your knuckles, and open up your IDE. You’ve got access to the most powerful AI models humanity has ever built. Gemini 1.5 Pro is blinking at you, ready to ingest a million tokens of context and judge your every keystroke.

And you just… freeze.

The cursor blinks. You blink back. You type “How do I…” and then backspace. You try again. “Write a function that…” Backspace.

It’s the Blank Page Syndrome, but for the AI era. And let me tell you, it’s not just for novelists anymore. It’s hitting us developers hard. We’re used to syntax errors and stack traces—concrete problems with concrete solutions. But an open text box? That’s an infinite void of possibility, and frankly, it’s terrifying.

Why does this happen? It’s a mix of Perfectionism (“If I don’t ask this perfectly, the code will be rubbish”) and the Paradox of Choice. When you can ask anything, deciding what to ask becomes a massive cognitive load. It’s like trying to order off a menu with 10,000 items when you haven’t even had your morning brew yet.

🧙‍♂️ The “Perfect Prompt” Fallacy

Here’s the trap we fall into: we treat the AI prompt like a compiler instruction.

In traditional coding, if you get the syntax 99% right, it still fails. It’s binary. So we’ve trained our brains to think that we need to craft the Perfect Prompt: a magic spell that, if incanted correctly, will summon the exact code we need, bug-free, first try.

We spend 20 minutes engineering a “Mega-Prompt” with forty constraints, three personas, and a partridge in a pear tree. We turn into “Prompt Engineers” instead of software engineers.

But here’s the truth, and it might sting a bit: The Perfect Prompt is a myth.

LLMs are probabilistic, not deterministic. They are stochastic parrots—brilliant, well-read parrots, but parrots nonetheless. Even if you use the exact same prompt twice, you might get different results. Chasing perfection in a probabilistic system is like trying to get the exact same espresso extraction time every single morning. There are too many variables. Humidity, bean age, grind size—or in the AI’s case, temperature settings and random seeds.

Focusing on the “perfect shot” leads to Analysis Paralysis. We over-engineer the input (the “Kitchen Sink” prompt) and get frustrated when the output isn’t telepathically accurate.
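To see why the same prompt can come back different, here’s a toy Python sketch of temperature sampling. It’s a simplification and not how Gemini or any particular model is implemented: the three-word “vocabulary” and its scores are made up, and real models score a huge vocabulary at every step. Lower temperatures pile probability onto the top-scoring word, and in this sketch a temperature of 0 falls back to always picking the top score.

```python
import math
import random


def sample_next_token(logits: dict[str, float], temperature: float, rng: random.Random) -> str:
    """Pick the next token from a toy score table using temperature-scaled softmax."""
    if temperature <= 0:
        # Temperature 0 is commonly treated as greedy decoding: always take the top score.
        return max(logits, key=logits.get)
    # Dividing by temperature sharpens (< 1) or flattens (> 1) the distribution.
    scaled = {tok: score / temperature for tok, score in logits.items()}
    # Subtract the largest value before exponentiating to avoid overflow.
    top = max(scaled.values())
    weights = [math.exp(s - top) for s in scaled.values()]
    # random.choices normalises the weights itself, so no explicit division is needed.
    return rng.choices(list(scaled), weights=weights, k=1)[0]


# Made-up scores for the word after "Write a function that..."
toy_logits = {"parses": 2.0, "validates": 1.5, "scrapes": 0.5}

# Same prompt, same temperature, different random seeds: the picks can differ.
for seed in (1, 2, 3):
    rng = random.Random(seed)
    print(seed, [sample_next_token(toy_logits, 1.0, rng) for _ in range(5)])

# Greedy decoding is repeatable.
print(sample_next_token(toy_logits, 0.0, random.Random(0)))  # parses
```

Fix the seed and the output repeats; change it and you’re back to a different espresso shot every morning.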

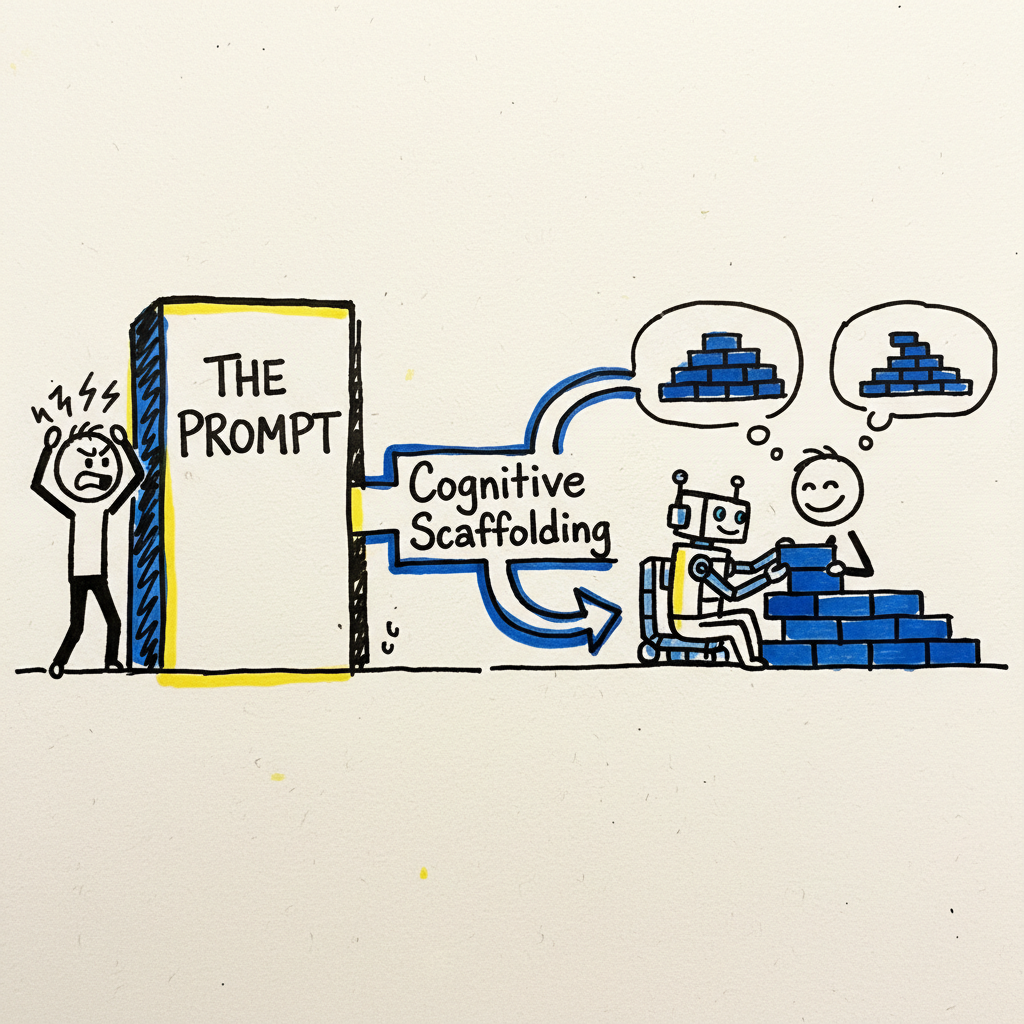

🏗️ Cognitive Scaffolding: The Antidote

So, how do we thaw out? We need to borrow a concept from educational psychology: Cognitive Scaffolding.

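Lev Vygotsky, a Soviet psychologist, laid the groundwork for this ages ago with his “zone of proximal development”; the term “scaffolding” itself came later, from Wood, Bruner and Ross in 1976. The idea is that you give a learner a temporary structure (a scaffold) to help them reach a higher level of understanding, then you slowly remove it.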

In the context of AI, we need to use the model to build the scaffold for us.

Just as scaffolding supports a building while it goes up, the AI supports your working memory while you structure the problem. It holds the context so you don’t have to.

Stop treating the AI as a code dispenser. Start treating it as a Cognitive Partner. Think of it less like a compiler and more like that senior engineer you grab for a rubber-ducking session. You don’t walk up to them and recite a 400-word spec without taking a breath. You have a conversation.

This shift from “Command Line Argument” (Input -> Output) to “Pair Programmer” (Dialogue -> Solution) is the key.

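One powerful technique here is Chain-of-Thought (CoT) prompting: getting the model to work through the intermediate steps before it commits to an answer. A simple way in: instead of asking for the solution, ask the AI to plan the solution.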

Bad: “Write a Python script to scrape this website.”

Better: “I need to scrape data from this site. Can you outline the steps we’d need to take, considering anti-bot protections and data structure?”

You’re forcing the AI to show its working out. You’re building a scaffold.
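To make the “Pair Programmer” shift concrete, here’s a minimal Python sketch of a multi-turn exchange that opens with the plan-first prompt above. The `Conversation` class, the message format and `fake_model` are all hypothetical stand-ins so the sketch runs offline; real SDKs, Gemini’s included, ship their own chat types. The point is structural: in this sketch every new message travels with the whole history (a common pattern for chat-style APIs), so your opening prompt doesn’t have to carry the entire problem on its own.

```python
from dataclasses import dataclass, field
from typing import Callable

Message = dict[str, str]
# Hypothetical: a "model" is any function that takes the whole history and returns a reply.
Model = Callable[[list[Message]], str]


@dataclass
class Conversation:
    model: Model
    history: list[Message] = field(default_factory=list)

    def say(self, text: str) -> str:
        # Record your turn, send the full history, then record the reply.
        self.history.append({"role": "user", "content": text})
        reply = self.model(self.history)
        self.history.append({"role": "model", "content": reply})
        return reply


def fake_model(history: list[Message]) -> str:
    """Offline stand-in for a real model: reports how much context it was given."""
    turn = sum(1 for m in history if m["role"] == "user")
    return f"(reply to turn {turn}; history has {len(history)} messages)"


chat = Conversation(fake_model)
# Turn 1: ask for a plan, not code.
chat.say("I need to scrape data from this site. Can you outline the steps we'd need "
         "to take, considering anti-bot protections and data structure?")
# Turn 2: build on the plan instead of restating the whole problem.
print(chat.say("Good plan. Write the code for step 1 only."))
# → (reply to turn 2; history has 3 messages)
```

Swap `fake_model` for a call into your SDK of choice and the shape stays the same: small turns, shared context.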

🔄 Actionable Frameworks: The Iterative Approach

The cure for the freeze is Iterative Prompting. Lower the stakes. Your first prompt doesn’t need to be perfect; it just needs to be a conversation starter. It can be “sloppy.” It can be a vague gesture in the general direction of the problem.

Here are two frameworks to help structure that first “sloppy” attempt so you don’t feel like you’re shouting into the void.

1. The “Five S” Model 🖐️

This is a cracking little framework for getting started without the faff.

- Set the Scene: Who is the AI? What is the context? (“You are a Senior Platform Engineer…”)

- Specify the Task: What do you actually want? (“I need to migrate this pipeline…”)

- Simplify Language: Don’t use jargon unless you have to. Plain English. LLMs get confused by ambiguity just like humans do.

- Structure the Response: How do you want it? (“Give me a bulleted list and then the YAML…”)

- Share Feedback: This is the loop. (“That’s good, but make it more secure…”)
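If it helps to see the Five S shape written down, here’s a minimal Python sketch. `FiveSPrompt` is a made-up helper, not part of any library, and the field values are invented for illustration. Only three of the S’s become text in the first message; Simplify Language is a habit you apply while writing, and Share Feedback lives in the follow-up turns.

```python
from dataclasses import dataclass


@dataclass
class FiveSPrompt:
    """Hypothetical helper that assembles a first-turn prompt from the Five S fields."""
    scene: str      # Set the Scene: who the AI is and what the context is
    task: str       # Specify the Task: what you actually want
    structure: str  # Structure the Response: the shape of the answer

    def render(self) -> str:
        # Simplify Language is a writing habit rather than a field: keep each part plain.
        # Share Feedback happens in later turns, so it isn't part of this first message.
        return "\n\n".join([self.scene, self.task, self.structure])


# Illustrative values only.
first_prompt = FiveSPrompt(
    scene="You are a Senior Platform Engineer reviewing a small team's CI setup.",
    task="I need to add a test stage that runs before every deploy.",
    structure="Give me a bulleted list of the steps, then the YAML.",
)
print(first_prompt.render())

# Share Feedback: just the next message in the conversation.
feedback = "That's good, but make it more secure: no secrets in plain text."
```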

2. The “IDEA” Model 💡

If “Five S” feels a bit too much like a safety briefing, try IDEA:

- I - Intent: What is the goal?

- D - Details: What constraints matter? (Language, framework, region).

- E - Examples: Show it an example or two of what good looks like. (This is “Few-Shot Prompting” in disguise.)

- A - Adjustments: Iterate based on the output.
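Here’s a rough Python sketch of how an IDEA prompt might come together, mainly to show what the few-shot Examples part looks like on the page. The `idea_prompt` function and the date-parsing task are hypothetical, chosen purely for illustration. Adjustments don’t appear because they happen after the first answer comes back.

```python
def idea_prompt(intent: str, details: list[str], examples: list[tuple[str, str]]) -> str:
    """Hypothetical helper: build a first prompt from Intent, Details and Examples."""
    lines = [f"Goal: {intent}", "Constraints:"]
    lines += [f"- {d}" for d in details]
    if examples:
        # Few-shot: each pair shows an input and the output you'd want for it.
        lines.append("Examples of what good looks like:")
        for given, wanted in examples:
            lines.append(f"Input: {given}\nOutput: {wanted}")
    return "\n".join(lines)


# Illustrative values only.
prompt = idea_prompt(
    intent="Write a Python function that turns free-text dates into ISO 8601 strings.",
    details=["Python 3.11", "standard library only", "UK day/month order"],
    examples=[("3rd March 2024", "2024-03-03"), ("25/12/2023", "2023-12-25")],
)
print(prompt)
# Adjustments: iterate in follow-up messages once you've seen the first answer.
```

Two concrete input/output pairs often pin down the format faster than a paragraph of description would.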

A Practical Example

Let’s see this in action. I want to create a regex (I know, I know) to validate a UK postcode.

The “Freeze” Prompt:

Staring at screen… “Regex for UK postcode.” Deletes. “Write a perfect regex that covers all edge cases for UK postcodes including BFPO.” Deletes.

The Iterative approach:

Me: “Mate, I need to validate some UK postcodes in Python. Can you explain the general structure of a postcode first?”

Gemini: Breaks down the Outward (e.g., ‘SW1A’) and Inward (e.g., ‘1AA’) codes.

Me: “Grand. Now, give me a basic regex that catches 90% of them. Don’t worry about the weird edge cases yet.”

Gemini:

`^[A-Z]{1,2}[0-9][A-Z0-9]? ?[0-9][A-Z]{2}$`

Me: “Nice one. Now, can we tighten it up? Only certain London districts like WC1A should get that extra letter.”

See the difference? I didn’t need to know the answer to start. I just needed to start the conversation.
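If you want to poke at that first draft yourself, here’s a minimal Python sketch using the standard `re` module. The helper name `looks_like_uk_postcode` is made up, and the upper-casing and whitespace stripping are my own additions rather than part of the pattern from the conversation.

```python
import re

# The first-draft pattern from the conversation above. It covers the common formats
# and deliberately skips edge cases such as GIR 0AA and BFPO addresses.
BASIC_UK_POSTCODE = re.compile(r"^[A-Z]{1,2}[0-9][A-Z0-9]? ?[0-9][A-Z]{2}$")


def looks_like_uk_postcode(text: str) -> bool:
    """Loose format check only: it says nothing about whether the postcode exists."""
    # Normalise case and surrounding whitespace before matching.
    return BASIC_UK_POSTCODE.match(text.strip().upper()) is not None


for candidate in ["SW1A 1AA", "wc1a 1aa", "M1 1AE", "B338TH", "12345", "SW1A  1AA"]:
    print(f"{candidate!r}: {looks_like_uk_postcode(candidate)}")
```

Notice that the draft already accepts `WC1A 1AA`, because `[A-Z0-9]?` lets any letter or digit sit in that slot. “Tightening it up” therefore means rejecting combinations that don’t exist, which is exactly the sort of refinement the next turn is for. It also rejects a doubled space (`SW1A  1AA`), which you may or may not want.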

☕ Conclusion: Embracing the Imperfect

The goal of using AI isn’t to produce a masterpiece in one shot. It’s to make progress.

The “Freeze” happens when we treat the chat window like a test we haven’t studied for. But it’s not a test. It’s a collaborative workspace. It’s a whiteboard session with a colleague who has read the entire internet but has zero common sense.

So next time you’re staring at that blinking cursor, feeling the imposter syndrome creeping in like cold coffee, remember: It doesn’t have to be perfect. It just has to be a start.

Throw in a “sloppy” prompt. Ask a dumb question. Ask it to help you think, not just type.

Your Challenge: The next time you open Gemini, don’t try to be a Prompt Engineer. Just say “Hello” and tell it what you’re stuck on.

Now, I’m off for a proper brew. This code won’t write itself (well, it might, but you know what I mean).