🎰🧠 The Neuro-Coder (Part 1): The Slot Machine in Your IDE

This is Part 1 of The Neuro-Coder, a series exploring the psychological impact of AI on software engineering.

Picture this. You’re deep in the code mines. You hit Ctrl+I (or invoke Gemini Code Assist in VS Code), type a vague instruction like “Refactor this to use a reducer,” and hit Enter.

The cursor blinks. A stream of green text cascades down the screen. It’s fast. It’s hypnotic. And for a split second, you feel a little buzz.

It works! (Mostly.) You hit Tab. You feel like a wizard. You feel 10x more productive.

But are you? Or are you just… stimulated?

I’ve been looking at the behavioral psychology behind our rapid adoption of AI—or at least the factors that could be impacting our adoption, both positively and negatively. The deeper I dug, the more I realized that we haven’t just upgraded our tools; we’ve fundamentally rewired our reward circuits.

We haven’t just installed a plugin. We’ve installed a Slot Machine.

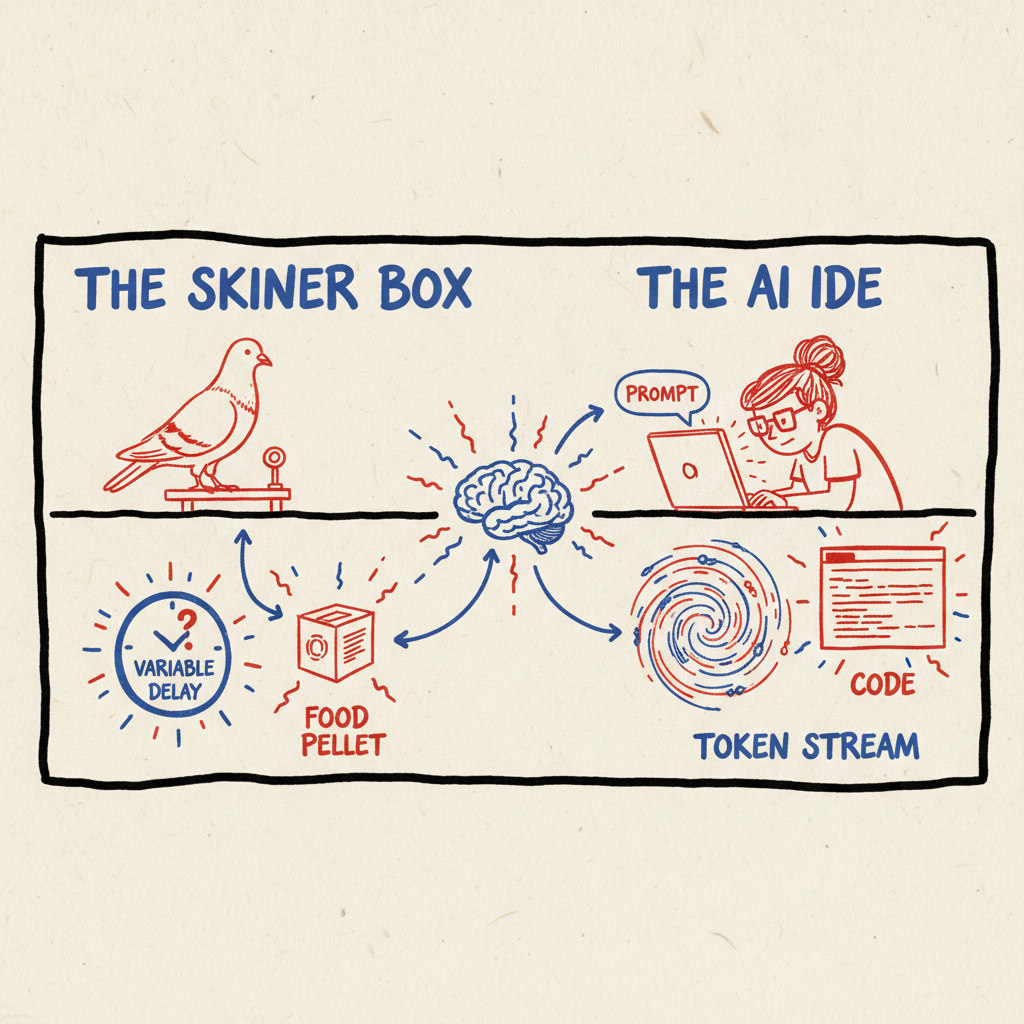

🏗️ The Digital Skinner Box

To understand why “Vibe Coding” feels so addictive, we have to look at the work of B.F. Skinner. In the mid-20th century, Skinner put pigeons in boxes and gave them a lever.

- Scenario A: Press lever -> Get food. Every time. (Continuous reinforcement, i.e. a Fixed Ratio of 1.)

- Scenario B: Press lever -> Get food sometimes. Maybe after 1 press, maybe after 20. (Variable Ratio).

Skinner found that Scenario B drove the pigeons wild. They pressed the lever obsessively, even when the food stopped coming. This Variable Ratio (VR) Schedule is the mathematical backbone of every casino slot machine on the planet.
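The difference between the two schedules can be sketched in a few lines of Python. This is a toy illustration, not Skinner's protocol: the function names, the ratio of 10, and the seed are all assumptions made for the example. The "variable" schedule here rewards each press with a fixed probability, which is strictly a random-ratio schedule and is used as a simple stand-in for VR.

```python
import random


def fixed_ratio(presses: int, ratio: int = 1) -> list[bool]:
    """Reward every `ratio`-th press (ratio=1 means every press pays out)."""
    return [(i + 1) % ratio == 0 for i in range(presses)]


def variable_ratio(presses: int, mean_ratio: int = 10, seed: int = 0) -> list[bool]:
    """Reward each press independently with probability 1/mean_ratio.

    Rewards arrive once per `mean_ratio` presses on average, but the gap
    between any two rewards is unpredictable.
    """
    rng = random.Random(seed)
    return [rng.random() < 1 / mean_ratio for _ in range(presses)]


def gaps_between_rewards(rewards: list[bool]) -> list[int]:
    """Number of presses it took to earn each successive reward."""
    gaps, since_last = [], 0
    for rewarded in rewards:
        since_last += 1
        if rewarded:
            gaps.append(since_last)
            since_last = 0
    return gaps


if __name__ == "__main__":
    # Fixed schedule: every gap is identical, so nothing is ever a surprise.
    print("fixed gaps:   ", gaps_between_rewards(fixed_ratio(100, ratio=10)))
    # Variable schedule: same average payout, but the next win could be anywhere.
    print("variable gaps:", gaps_between_rewards(variable_ratio(100, mean_ratio=10)))
```

Both schedules pay out at roughly the same average rate. What differs is the spread of the gaps, and that spread is the part the slot machine analogy rests on.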

And now, it’s the backbone of your IDE.

The Mechanism

Look at the interaction loop of an AI coding assistant:

- The Trigger: You see a problem or a blank line.

- The Action: You type a prompt (The Lever Pull). It’s low effort.

- The Wait: The tokens stream in (The Spinning Reels). This latency is crucial—it builds anticipation.

- The Reward:

  - The Jackpot: Perfect code. Dopamine spike!

  - The Loss: Total hallucination.

  - The Near Miss: It compiles but has a logic bug.
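One way to make the three outcomes concrete is to classify a generated snippet by two checks: does it compile, and does it behave correctly? The sketch below is hypothetical. `Outcome`, `classify`, and the `check` callback are names invented for this example, and treating "does not compile" as the Loss case is a simplifying assumption.

```python
from collections.abc import Callable
from enum import Enum


class Outcome(Enum):
    JACKPOT = "jackpot"      # compiles and behaves correctly
    NEAR_MISS = "near miss"  # compiles, but fails at runtime or gives wrong results
    LOSS = "loss"            # does not even compile


def classify(source: str, check: Callable[[dict], bool]) -> Outcome:
    """Classify generated Python `source`.

    `check` is supplied by the caller: it receives the namespace produced by
    running the code and returns True if the code behaves as intended.
    """
    try:
        code = compile(source, "<generated>", "exec")
    except SyntaxError:
        return Outcome.LOSS
    namespace: dict = {}
    try:
        exec(code, namespace)  # illustration only: never exec untrusted code
        return Outcome.JACKPOT if check(namespace) else Outcome.NEAR_MISS
    except Exception:
        # Looks plausible but blows up, e.g. it calls a function that does not exist.
        return Outcome.NEAR_MISS


if __name__ == "__main__":
    def adds_correctly(ns: dict) -> bool:
        return ns["add"](2, 3) == 5

    samples = {
        "good": "def add(a, b):\n    return a + b\n",
        "buggy": "def add(a, b):\n    return a - b\n",
        "broken": "def add(a, b) return a + b",
    }
    for name, source in samples.items():
        print(name, "->", classify(source, adds_correctly).value)
```

The Near Miss is the only outcome that needs the second check to detect, which is part of why it is easy to mistake for a win at a glance.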

In traditional coding, the feedback loop is largely Deterministic. If I type print("Hello"), it prints “Hello.”

Now, seasoned engineers will argue: “Wait, what about debugging a race condition? That’s not deterministic!”

You’re right. Manual debugging is the original Variable Ratio schedule. You don’t know if the next console.log will reveal the bug. But there is a critical difference: Effort.

Manual debugging requires intense, focused cognitive labor. The dopamine reward at the end is earned. In AI coding, the reward is unearned. You pull the lever with a low-effort prompt, and the AI delivers a “windfall” of code. This low-friction, high-variability loop is the precise architectural blueprint of the slot machine.

🧪 The Chemistry of “The Buzz”

Why does this uncertainty make it addictive? It’s down to Reward Prediction Error (RPE).

Neuroscience tells us that dopamine isn’t just a “pleasure molecule”; it’s a learning molecule. It spikes when reality exceeds our expectations.

- Manual Coding: You write a loop. It works. Your brain expected it to work. RPE = 0. No buzz.

- AI Coding: You type “make it faster.” The AI rewrites the entire algorithm to run in O(n) time. You didn’t expect that level of output for that level of effort. RPE = High. Massive dopamine spike.
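The usual way to formalize this is a delta rule: the prediction error is the reward received minus the reward expected, and the expectation is then nudged toward what actually happened. Below is a minimal sketch, assuming a Rescorla-Wagner style update. The reward values and the learning rate of 0.2 are made-up numbers chosen only to illustrate the two bullets above.

```python
def prediction_error(reward: float, expected: float) -> float:
    """RPE: positive when the outcome beats expectations, zero when it matches."""
    return reward - expected


def update_expectation(expected: float, reward: float, learning_rate: float = 0.2) -> float:
    """Move the expectation a fraction of the way toward the observed reward."""
    return expected + learning_rate * prediction_error(reward, expected)


if __name__ == "__main__":
    # Manual coding: the loop works, exactly as predicted.
    print("manual RPE:", prediction_error(reward=1.0, expected=1.0))

    # AI coding: a low-effort prompt was expected to yield 1.0 and yields 5.0.
    expected = 1.0
    for pull in range(1, 6):
        rpe = prediction_error(reward=5.0, expected=expected)
        print(f"pull {pull}: expected={expected:.2f}, RPE={rpe:.2f}")
        expected = update_expectation(expected, reward=5.0)
```

In this toy model a windfall that arrives every time stops being a surprise, because the expectation catches up and the error shrinks toward zero. An unpredictable payout keeps the error from settling, which fits the claim that the surprise, not the utility, is what drives the buzz.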

We aren’t addicted to the utility of the AI. We are addicted to the surprise of the output. We are chasing the “windfall” of getting complex work done for free.

🎣 The “Near Miss” Trap

This is where it gets dangerous. In gambling, a “Near Miss” (two cherries and a lemon) activates much of the same reward circuitry as a Win. It tells the brain, “You’re so close! Try again!”

In AI coding, a Deceptive Hallucination is a Near Miss.

If the AI generates garbage, that’s just a Loss. But when the code looks right—the syntax is perfect, it imports the right libraries, but it calls a function that doesn’t quite exist—that is a Near Miss.

A rational engineer would stop, read the docs, and fix it. But the “Neuro-Coder” brain sees a Near Miss. It says, “Just regenerate. Tweak the prompt. It was 99% there.”

So we enter the Regeneration Loop. We spend 20 minutes prompting and re-prompting, chasing the loss, when we could have written the code manually in 5 minutes. We are gambling with our time, hoping the next pull of the lever hits the jackpot.
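The trade-off can be put in rough numbers. Assume, as a simplification, that each regeneration is an independent attempt that succeeds with probability p. The number of attempts until the first success is then geometric with mean 1/p. In the sketch below the function names, the 2 minutes per attempt (prompt, wait, read), and the 10% hit rate are illustrative assumptions; the 5 minute manual figure is the one used above.

```python
def expected_prompting_minutes(p_success: float, minutes_per_attempt: float) -> float:
    """Expected time until the first usable result, at 1/p attempts on average."""
    if not 0 < p_success <= 1:
        raise ValueError("p_success must be in (0, 1]")
    return minutes_per_attempt / p_success


def break_even_probability(minutes_per_attempt: float, manual_minutes: float) -> float:
    """Per-attempt success rate below which writing it by hand is faster on average."""
    return minutes_per_attempt / manual_minutes


if __name__ == "__main__":
    manual = 5.0       # minutes to write the code by hand
    per_attempt = 2.0  # assumed minutes to prompt, wait, and read one result

    expected = expected_prompting_minutes(p_success=0.1, minutes_per_attempt=per_attempt)
    threshold = break_even_probability(per_attempt, manual)
    print(f"expected prompting time: {expected:.0f} min (manual: {manual:.0f} min)")
    print(f"regenerating only pays off when p > {threshold:.0%}")
```

With 2-minute attempts and a 10% hit rate, the expected cost is about 20 minutes, and regenerating only beats the 5-minute manual route when each attempt succeeds more than 40% of the time. The Near Miss makes p feel much higher than it is.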

🧠 The Counter-Argument: Is it Addiction or Just Laziness?

Now, a neuroscientist might look at this and argue: “This isn’t gambling addiction; it’s energy conservation.”

And they would have a point. The brain hates burning calories. Thinking is metabolically expensive. Prompting is cheap.

There is a strong body of research supporting the idea of Automation Complacency. When we prompt an AI, our brain often enters a state of hypo-arousal (low vigilance). We aren’t chasing a “high”; we are avoiding the “pain” of cognitive load (Negative Reinforcement).

The dopamine spike might not be Reward; it might be Relief.

Research into Human-AI Teaming, such as studies on “Automation Bias”, suggests that developers can report feeling “buzzed” and productive even when the measured outcome shows they are merely trading cognitive load for review time. The feeling is a misattribution: we confuse the speed of text generation with the speed of problem solving.

So, whether it’s the thrill of the slot machine or the relief of the snooze button, the result is the same: we are handing over the wheel.

☕ The Takeaway: Recognition

I’m not saying “Don’t use AI.” I use it every day. But we need to use it with Metacognition (thinking about thinking).

Next time you find yourself hitting “Regenerate” for the third time, stop. Ask yourself:

“Am I engineering a solution, or am I just pulling the lever?”

If you’re pulling the lever, step away. Pour a coffee. Write the code yourself. Break the loop.

In Part 2, we’ll look at the cognitive biases that keep us trapped in this loop, specifically the “Sunk Cost Fallacy” of prompt engineering.

🔬 The Hypothesis & The Request

This post proposes the following hypothesis:

Hypothesis: The modern AI-assisted IDE functions as a “Digital Skinner Box,” utilizing Variable Ratio reinforcement schedules to drive compulsive usage. The primary driver of engagement is often the anticipation of the windfall (Reward Prediction Error) rather than the objective utility of the output.

Research Question I’d Love to See Answered:

Does the brain activity of a developer “prompting” an AI resemble the neural signature of problem-solving (flow) or the neural signature of gambling (anticipation)? We need fMRI comparisons of “manual coding” vs. “prompt-and-wait” cycles.

📚 Further Reading & Research

If you want to geek out on the science behind this, here are the papers that informed this post:

- Reward Prediction Error: Dopaminergic signalling of uncertainty and the aetiology of gambling addiction (Zack et al., 2020). Explains why uncertainty drives dopamine.

- Variable Ratio Schedules: Chronic exposure to a gambling-like schedule… (NIH). Animal research in the Skinner Box tradition on how unpredictable rewards create persistent behavior.

- The Near Miss Effect: Journal of Behavioral Addictions. Understanding why “almost winning” triggers the same reward pathways as a win.

- Perception vs. Reality: Expectation vs. Experience: Evaluating the Usability of Code Generation Tools (Vaithilingam et al., 2022). A user study in which most participants preferred the AI assistant even though it did not necessarily improve task completion time or success rate.

- Cognitive Offloading: AI Tools in Society: Impacts on Cognitive Offloading and the Future of Critical Thinking (Gerlich, Societies 2025). A study of 666 participants revealing a significant negative correlation between frequent AI usage and critical thinking skills, mediated by the brain’s tendency to offload cognitive effort.