📉☕ The Neuro-Coder (Part 3): The Dopamine Gap (The Reality Check)

This is Part 3 of The Neuro-Coder. In Part 2, we looked at the biases that trap us. Now, we look at the data that exposes the cost.

“AI makes me 10x faster.”

We’ve all said it. We’ve all felt it. You prompt, the code appears, and you feel like you’ve just sprinted a marathon in a Ferrari.

But feelings aren’t facts. And the data coming out of rigorous research published in 2025 paints a disturbing picture: on many complex tasks, we aren’t speeding up. We are slowing down.

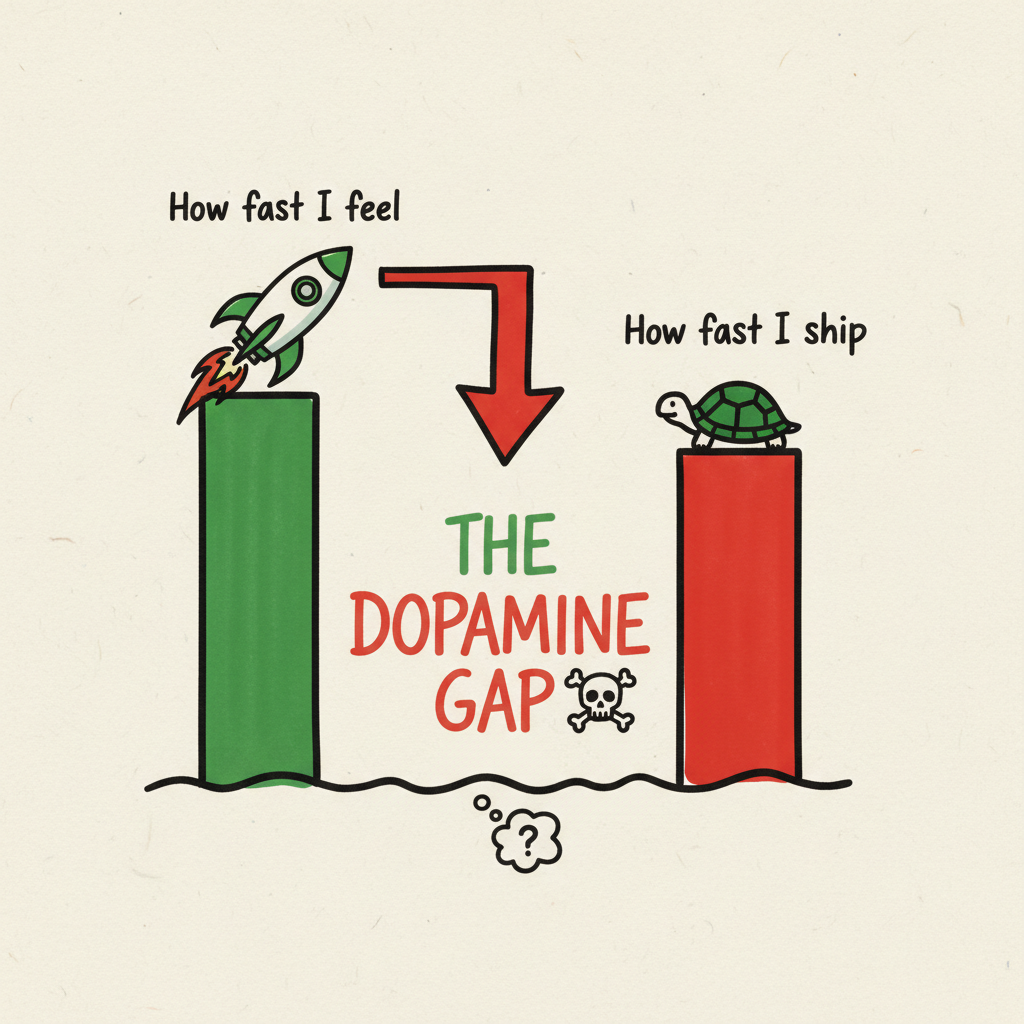

We are suffering from the Dopamine Gap.

📊 The 39-Point Delusion

The most damning evidence comes from a randomized controlled trial run by METR (Model Evaluation & Threat Research). They took 16 experienced open-source developers (not juniors, experienced engineers) and had them work on complex, real-world coding tasks, with and without frontier models and modern AI coding agents.

They measured three things:

- Prediction: How much faster did they think they would be? (+24%)

- Perception: How much faster did they feel afterwards? (+20%)

- Reality: How much faster were they actually?

-19%.

They were slower: tasks took 19% longer with AI than without it.

That is a 39-point delta between perception (+20%) and reality (-19%). This is the Dopamine Gap.
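The arithmetic behind that gap can be sketched in a few lines. This is a minimal illustration, assuming "speedup" means the percent reduction in completion time relative to working without AI. The function names and the 100, 80, and 119 minute figures are hypothetical, chosen only to reproduce the percentages above.

```python
def speedup_pct(baseline_minutes: float, observed_minutes: float) -> float:
    """Percent reduction in completion time versus the no-AI baseline.

    Positive means faster than baseline, negative means slower.
    """
    return (baseline_minutes - observed_minutes) * 100 / baseline_minutes


def perception_gap(perceived_pct: float, actual_pct: float) -> float:
    """Gap, in percentage points, between felt and measured speedup."""
    return perceived_pct - actual_pct


# Hypothetical task: 100 minutes without AI (illustrative, not study data).
baseline = 100.0
felt = speedup_pct(baseline, 80.0)       # it felt like an 80-minute task
measured = speedup_pct(baseline, 119.0)  # the clock said 119 minutes

gap = perception_gap(felt, measured)
print(f"felt {felt:+.0f}%, measured {measured:+.0f}%, gap {gap:.0f} points")
# prints: felt +20%, measured -19%, gap 39 points
```

The gap is a difference in percentage points, not a ratio, which is why +20 and -19 add up to 39.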

The crucial detail? This slowdown happened on complex, real-world tasks.

For simple boilerplate or a standalone regex, AI is an undeniable speedup. But in the messy reality of production systems, where logic is distributed and edge cases are everywhere, the picture flips. The developers felt fast because they were generating code rapidly, but they shipped slowly because they spent hours paying the “Verification Tax.”

⚖️ The “Verification Tax” (The 80/20 Inversion)

Why does this happen? Because AI inverts where the effort in software engineering goes. In rough, illustrative proportions:

The Old Way (Manual):

- Thinking (80%): Designing the logic, understanding the system.

- Typing (20%): The mechanical act of syntax.

The AI Way:

- Prompting (10%): “Write a function that…”

- Generation (1%): Whoosh.

- Verification (89%): Reading, debugging, testing, fixing.

AI automates the “easy” 20% instantly. That’s the dopamine hit. But it creates a massive new category of work: Verification Labor.
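To see how the two splits compare in absolute time, here is a rough sketch. It assumes a hypothetical task that takes 100 minutes by hand and 119 minutes with AI, and it applies the illustrative percentages from the two lists above. The function name and both totals are assumptions for illustration, not measurements.

```python
def phase_minutes(total_minutes: float, shares: dict[str, float]) -> dict[str, float]:
    """Split a task's total time into phases according to fractional shares."""
    if abs(sum(shares.values()) - 1.0) > 1e-9:
        raise ValueError("shares must sum to 1.0")
    return {phase: total_minutes * share for phase, share in shares.items()}


# Hypothetical totals: 100 minutes by hand, 119 minutes with AI.
manual = phase_minutes(100.0, {"thinking": 0.80, "typing": 0.20})
with_ai = phase_minutes(
    119.0, {"prompting": 0.10, "generation": 0.01, "verification": 0.89}
)

# Minutes saved on the mechanical part versus extra minutes spent checking.
typing_saved = manual["typing"] - with_ai["generation"]
extra_review = with_ai["verification"] - manual["thinking"]

print(f"typing saved: {typing_saved:.1f} min")
print(f"verification beyond manual thinking time: {extra_review:.1f} min")
```

Under these assumptions, generation saves about 19 minutes of typing, while verification costs about 26 minutes more than the thinking phase it replaced. The visible saving is real, but it is smaller than the new cost.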

Here is the kicker: It’s not just reading; it’s “Cold-Start Simulation.”

When you write code manually, you build a “Mental Model” of the state changes incrementally. You simulate the logic line-by-line as you type.

When AI generates code, it arrives fully formed. It is Alien Code: you didn’t build the mental model, so you have to reverse-engineer it. To verify it, you must load the entire state machine into your Working Memory at once and simulate the logic flow. This Cold-Start is cognitively expensive. In cognitive load theory terms, it is Extraneous Cognitive Load: effort spent on how the material arrives rather than on the problem itself, and it just burns you out.

💸 The Visual Placebo (The Fluency Heuristic)

So why do we feel faster?

Cognitive science calls this the Fluency Heuristic. When information is processed quickly and looks clean (perfect syntax), our brain tends to judge it as more likely to be “True.”

We judge task duration by the “Active Phase” (typing/prompting). When the screen fills with code in 2 seconds, our brain anchors to that timestamp. “Task Done!”

The Blank Page Trap: Proponents argue AI solves the “Blank Page Problem” (activation energy). This is true. But it shifts the curve: Easy Start, Brutal Finish. It solves the Emotional friction of starting but increases the Cognitive friction of finishing.

☕ The Takeaway: The 90/10 Rule

This doesn’t mean “Don’t use AI.” It means “Don’t trust your feelings.”

Adopt the 90/10 Rule:

If the AI does 90% of the work in 10 seconds, assume the last 10% (verification) will take 90% of the time.
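One way to turn the rule into an estimate is sketched below. It assumes the time you spend getting to a first AI draft (prompting plus generation) is about 10% of the real total. That reading of the rule, the function name, and the 6-minute example are all assumptions for illustration.

```python
def ninety_ten_estimate(
    minutes_to_first_draft: float, draft_share: float = 0.10
) -> dict[str, float]:
    """Scale time-to-first-draft up to a full-task budget.

    draft_share is the assumed fraction of total time spent reaching the draft.
    """
    if not 0 < draft_share < 1:
        raise ValueError("draft_share must be between 0 and 1")
    total = minutes_to_first_draft / draft_share
    return {"total": total, "verification": total - minutes_to_first_draft}


# Hypothetical: 6 minutes of prompting until the code "looks done".
estimate = ninety_ten_estimate(6.0)
print(
    f"budget {estimate['total']:.0f} min total, "
    f"{estimate['verification']:.0f} of them for verification"
)
```

If 10% turns out to be too pessimistic for your work, pass a different `draft_share`. The point is to write the multiplier down before the feeling of speed sets the estimate for you.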

Adjust your estimates. Adjust your expectations. And when you feel that rush of speed, remember: you haven’t crossed the finish line. You’ve just arrived at the starting line of the debugging marathon.

In Part 4, we’ll look at the human cost of this dynamic. We’ll explore how this “Super-Stimulus” disproportionately affects developers with ADHD and how it is creating a generation of “Zombie Juniors” who can generate code but can’t understand it.

🔬 The Hypothesis & The Request

This post proposes the following hypothesis:

Hypothesis: There is a quantifiable disconnect between Perceived Productivity and Actual Performance. This “Dopamine Gap” is driven by the fact that reading/verifying AI code imposes a higher Extraneous Cognitive Load than writing it.

Research Question I’d Love to See Answered:

Eye-tracking studies on code review. Do developers visually scan AI-generated code differently than human-written code? Do we skip critical logic blocks due to the “Visual Placebo” effect?

📚 Further Reading

- METR Study: Measuring the Impact of Early-2025 AI on Experienced Open-Source Developer Productivity. The definitive RCT showing the discrepancy between perceived and actual speed.

- The Uplevel Study: Can GenAI Actually Improve Developer Productivity? Found that despite AI adoption, cycle time didn’t improve and burnout risk increased.

- The “Beacon” Trap: An Eye-tracking Study on the Role of Scan Time (Sharif et al., 2012). Research showing that experts rely on visual “beacons” (familiar patterns) to judge code quality quickly. Because AI generates perfect beacons, it hacks this heuristic, causing experts to skim over critical logic errors.

- Cognitive Offloading: AI Tools in Society: Impacts on Cognitive Offloading (MDPI). Examining how offloading tasks affects critical thinking and retention.