I have been looking for ways to show how UCSD can integrate with various tools and their APIs. I had an idea for Cisco Spark (a collaboration tool) a while back and have been waiting for the publication of the REST API. Well, in December the API was released. It should be noted that I have also written this up on the Cisco Communities site - https://communities.cisco.com/docs/DOC-64423

As part of a UK&I TechHuddle I decided to create some workflows that would add people to Spark rooms and post messages (including pictures) to the room. I did a write up here.

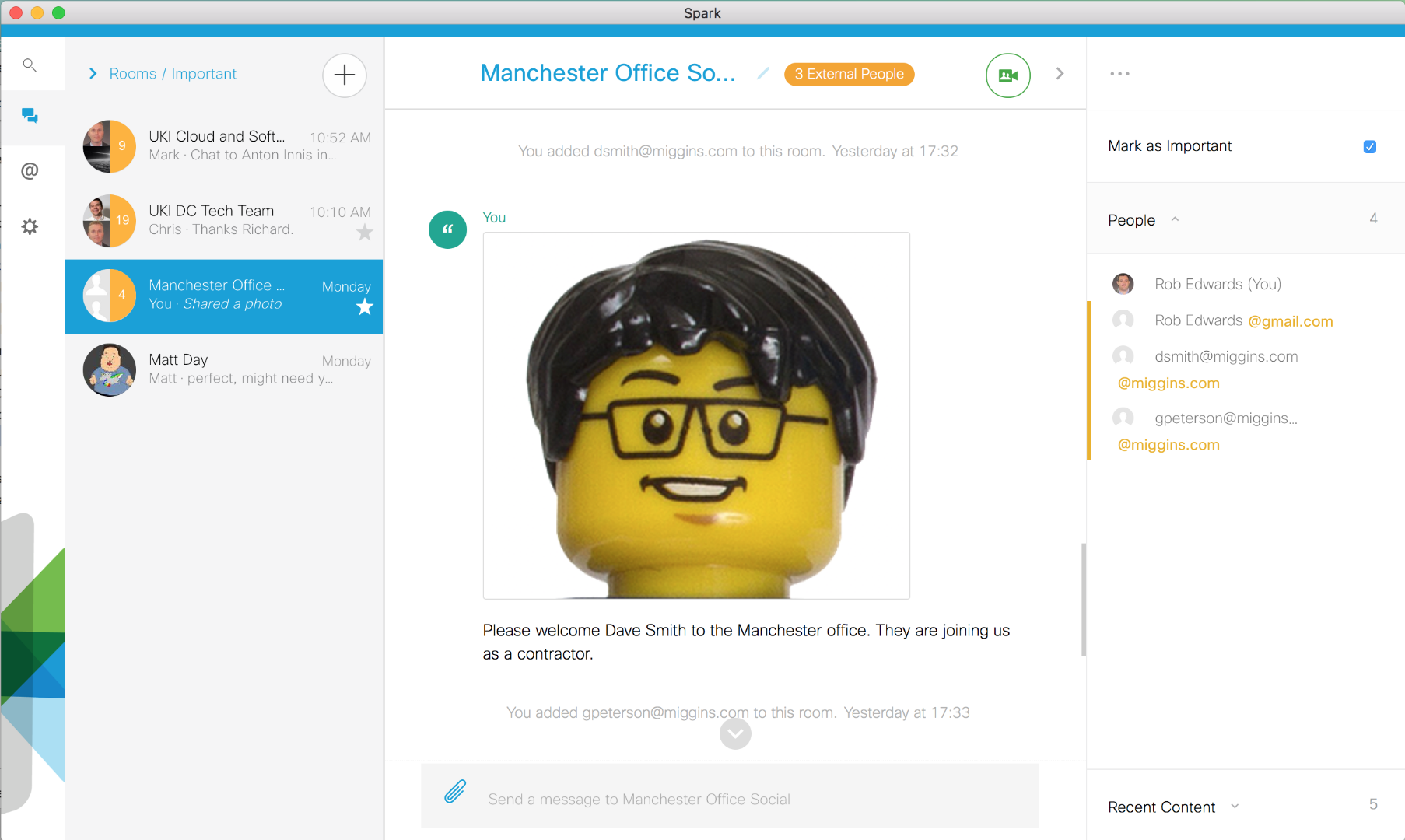

The screenshot shows the Spark chat client: a user ‘dsmith@miggins.com’ has been added to the room, and a message (including an image) was posted straight after.

The following video shows some of the integration examples from an end-user perspective.

The example workflow attached comes with two custom tasks:

- Spark_Membership_Create

- Spark_Message_Post

If you want more information about the Spark API I would highly recommend the documentation at https://developer.ciscospark.com/index.html

The Spark_Membership_Create task will add a user to a specific Spark room. The inputs required are:

| Input | Description |
|---|---|
| token | Spark API Token for authentication reasons |
| member email | The email address of the person you want to add to the Spark room |
| spark_room_id | The ID of the Spark room. |
| file | The file you want to post with the message |
| proxy server [optional] | The address of your proxy server if UCSD is sat behind a proxy |
| proxy port [optional] | The port of the proxy |

importPackage(java.util);

importPackage(java.lang);

importPackage(java.io);

importPackage(com.cloupia.lib.util);

importPackage(com.cloupia.model.cIM);

importPackage(com.cloupia.service.cIM.inframgr);

importPackage(org.apache.commons.httpclient);

importPackage(org.apache.commons.httpclient.cookie);

importPackage(org.apache.commons.httpclient.methods);

importPackage(org.apache.commons.httpclient.auth);

//=================================================================

// Title: Spark_Membership_Create

// Description: This will add a user to a specific spark group

//

// Author: Rob Edwards (@clijockey/robedwa@cisco.com)

// Date: 18/12/2015

// Version: 0.1

// Dependencies:

// Limitations/issues:

//=================================================================

// Inputs

var token = input.token;

var fqdn = "api.ciscospark.com";

var member = input.member;

var roomId = input.roomId;

// Build up the URI

var primaryTaskPort = "443";

var primaryTaskUri = "/v1/memberships";

// Request Parameters to be passed

var primaryTaskData = "{\"roomId\" : \"" + roomId + "\", " +
"\"personEmail\" : \"" + member + "\", " +
"\"isModerator\" : false}";

// Main code start

// Perform primary task

logger.addInfo("Request to https://"+fqdn+":"+primaryTaskPort+primaryTaskUri);

logger.addInfo("Sending payload: "+primaryTaskData);

var proxy_host = "someproxy.com";

var proxy_port = "80";

var taskClient = new HttpClient();

if (proxy_host != null) {

taskClient.getHostConfiguration().setProxy(proxy_host, proxy_port);

}

taskClient.getHostConfiguration().setHost(fqdn, primaryTaskPort, "https");

taskClient.getParams().setCookiePolicy("default");

var taskMethod = new PostMethod(primaryTaskUri);

taskMethod.setRequestEntity(new StringRequestEntity(primaryTaskData));

taskMethod.addRequestHeader("Content-Type", "application/json");

taskMethod.addRequestHeader("Accept", "application/json");

taskMethod.addRequestHeader("Authorization", token);

taskClient.executeMethod(taskMethod);

// Check the status code and fail the task if necessary.

var statuscode = taskMethod.getStatusCode();

var resp = taskMethod.getResponseBodyAsString();

logger.addInfo("Response received: "+resp);

// Process returned status codes

if (statuscode == 400) {

logger.addError("Failed to configure Spark. HTTP response code: "+statuscode);

logger.addInfo("Return code "+statuscode+": The request was invalid or cannot be otherwise served. An accompanying error message will explain further.");

logger.addInfo("Response received: "+resp);

// Set this task as failed.

ctxt.setFailed("Request failed.");

} else if (statuscode == 401) {

logger.addError("Failed to configure Spark. HTTP response code: "+statuscode);

logger.addInfo("Return code "+statuscode+": Authentication credentials were missing or incorrect.");

logger.addInfo("Response received: "+resp);

// Set this task as failed.

ctxt.setFailed("Request failed.");

} else if (statuscode == 403) {

logger.addError("Failed to configure Spark. HTTP response code: "+statuscode);

logger.addInfo("Return code "+statuscode+": The request is understood, but it has been refused or access is not allowed.");

logger.addInfo("Response received: "+resp);

// Set this task as failed.

ctxt.setFailed("Request failed.");

} else if (statuscode == 404) {

logger.addError("Failed to configure Spark. HTTP response code: "+statuscode);

logger.addInfo("Return code "+statuscode+": The URI requested is invalid or the resource requested, such as a user, does not exist. Also returned when the requested format is not supported by the requested method.");

logger.addInfo("Response received: "+resp);

// Set this task as failed.

ctxt.setFailed("Request failed.");

} else if (statuscode == 409) {

logger.addWarn("Failed to configure Spark. HTTP response code: "+statuscode);

logger.addInfo("Return code "+statuscode+": The request could not be processed because it conflicts with some established rule of the system. For example, a person may not be added to a room more than once.");

logger.addInfo("Response received: "+resp);

} else if (statuscode == 500) {

logger.addError("Failed to configure Spark. HTTP response code: "+statuscode);

logger.addInfo("Return code "+statuscode+": Something went wrong on the server.");

logger.addInfo("Response received: "+resp);

// Set this task as failed.

ctxt.setFailed("Request failed.");

} else if (statuscode == 503) {

logger.addError("Failed to configure Spark. HTTP response code: "+statuscode);

logger.addInfo("Return code "+statuscode+": Server is overloaded with requests. Try again later.");

logger.addInfo("Response received: "+resp);

// Set this task as failed.

ctxt.setFailed("Request failed.");

} else {

logger.addInfo("All looks good. HTTP response code: "+statuscode);

}

taskMethod.releaseConnection();
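The request body in this script is assembled by string concatenation, so a room ID or email address containing a double quote or backslash would produce invalid JSON. A minimal sketch of an alternative, assuming the script engine in your UCSD release provides the standard `JSON` object (I have not verified this for every version). `buildMembershipPayload` is a hypothetical helper name and is not part of the attached task:

```javascript
// Hypothetical helper: builds the request body for POST /v1/memberships.
// Assumes the standard JSON object is available in the script engine.
function buildMembershipPayload(roomId, personEmail) {
    return JSON.stringify({
        // String() also converts Java strings handed over by the task inputs.
        roomId: String(roomId),
        personEmail: String(personEmail),
        isModerator: false
    });
}

// Example call; the room ID is a placeholder, not a real Spark room.
var examplePayload = buildMembershipPayload("ROOM_ID_PLACEHOLDER", "dsmith@miggins.com");
```

The result could then be passed to `StringRequestEntity` in place of `primaryTaskData`.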

The Spark_Message_Post task will post a message to a specific Spark room. The inputs required are:

| Input | Description |
|---|---|
| token | Spark API Token for authentication reasons |
| message | Message to post to Spark |
| spark_room_id | The ID of the Spark room you want to post into |
| file | The file you want to post with the message |
| proxy [optional] | The address of the proxy server. My UCSD instance sits behind a proxy, so it has to be specified in the CloupiaScript. |
| proxy port [optional] | The port of the proxy |
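The proxy inputs are listed as optional, but both scripts set `proxy_host` and `proxy_port` to fixed values, so the `if (proxy_host != null)` check always passes. A minimal sketch of how optional values could be handled instead. `resolveProxy` is a hypothetical helper, and the way the values reach the script (for example as task inputs) is an assumption:

```javascript
// Hypothetical helper: turns optional proxy settings into an object,
// or returns null when no proxy host was supplied.
function resolveProxy(host, port) {
    var trimmedHost = (host === null || host === undefined)
        ? ""
        : String(host).replace(/^\s+|\s+$/g, "");
    if (trimmedHost === "") {
        return null; // no proxy configured
    }
    var parsedPort = parseInt(port, 10);
    if (isNaN(parsedPort) || parsedPort < 1 || parsedPort > 65535) {
        throw new Error("Invalid proxy port: " + port);
    }
    return { host: trimmedHost, port: parsedPort };
}

// In the task this could be used roughly as:
//   var proxy = resolveProxy(proxy_host, proxy_port);
//   if (proxy !== null) {
//       taskClient.getHostConfiguration().setProxy(proxy.host, proxy.port);
//   }
```

Parsing the port to a number up front also avoids relying on the script engine to convert the string `"80"` when calling `setProxy`.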

importPackage(java.util);

importPackage(java.lang);

importPackage(java.io);

importPackage(com.cloupia.lib.util);

importPackage(com.cloupia.model.cIM);

importPackage(com.cloupia.service.cIM.inframgr);

importPackage(org.apache.commons.httpclient);

importPackage(org.apache.commons.httpclient.cookie);

importPackage(org.apache.commons.httpclient.methods);

importPackage(org.apache.commons.httpclient.auth);

//=================================================================

// Title: Spark_Message_Post

// Description: This will post a message to a specific spark group

//

// Author: Rob Edwards (@clijockey/robedwa@cisco.com)

// Date: 18/12/2015

// Version: 0.1

// Dependencies:

// Limitations/issues: Only works with UCS Director version 5.3 and below.

//=================================================================

// Inputs

var token = input.token;

var fqdn = "api.ciscospark.com";

var message = input.message;

var file = input.file;

var roomId = input.roomId;

var proxy_host = "someproxy.com";

var proxy_port = "80";

// Build up the URI

var primaryTaskPort = "443";

var primaryTaskUri = "/v1/messages";

// Data to be passed

var primaryTaskData = "{\"roomId\" : \"" + roomId + "\", " +
"\"file\" : \"" + file + "\", " +
"\"text\" : \"" + message + "\"}";

// Main code start

// Perform primary task

logger.addInfo("Request to https://"+fqdn+":"+primaryTaskPort+primaryTaskUri);

logger.addInfo("Sending payload: "+primaryTaskData);

var taskClient = new HttpClient();

if (proxy_host != null) {

taskClient.getHostConfiguration().setProxy(proxy_host, proxy_port);

}

taskClient.getHostConfiguration().setHost(fqdn, primaryTaskPort, "https");

taskClient.getParams().setCookiePolicy("default");

var taskMethod = new PostMethod(primaryTaskUri);

taskMethod.setRequestEntity(new StringRequestEntity(primaryTaskData));

taskMethod.addRequestHeader("Content-Type", "application/json");

taskMethod.addRequestHeader("Accept", "application/json");

taskMethod.addRequestHeader("Authorization", token);

taskClient.executeMethod(taskMethod);

// Check the status code and fail the task if necessary.

var statuscode = taskMethod.getStatusCode();

var resp = taskMethod.getResponseBodyAsString();

logger.addInfo("Response received: "+resp);

if (statuscode == 400) {

logger.addError("Failed to configure Spark. HTTP response code: "+statuscode);

logger.addInfo("Return code "+statuscode+": The request was invalid or cannot be otherwise served. An accompanying error message will explain further.");

logger.addInfo("Response received: "+resp);

// Set this task as failed.

ctxt.setFailed("Request failed.");

} else if (statuscode == 401) {

logger.addError("Failed to configure Spark. HTTP response code: "+statuscode);

logger.addInfo("Return code "+statuscode+": Authentication credentials were missing or incorrect.");

logger.addInfo("Response received: "+resp);

// Set this task as failed.

ctxt.setFailed("Request failed.");

} else if (statuscode == 403) {

logger.addError("Failed to configure Spark. HTTP response code: "+statuscode);

logger.addInfo("Return code "+statuscode+": The request is understood, but it has been refused or access is not allowed.");

logger.addInfo("Response received: "+resp);

// Set this task as failed.

ctxt.setFailed("Request failed.");

} else if (statuscode == 404) {

logger.addError("Failed to configure Spark. HTTP response code: "+statuscode);

logger.addInfo("Return code "+statuscode+": The URI requested is invalid or the resource requested, such as a user, does not exist. Also returned when the requested format is not supported by the requested method.");

logger.addInfo("Response received: "+resp);

// Set this task as failed.

ctxt.setFailed("Request failed.");

} else if (statuscode == 409) {

logger.addWarn("Failed to configure Spark. HTTP response code: "+statuscode);

logger.addInfo("Return code "+statuscode+": The request could not be processed because it conflicts with some established rule of the system. For example, a person may not be added to a room more than once.");

logger.addInfo("Response received: "+resp);

} else if (statuscode == 500) {

logger.addError("Failed to configure Spark. HTTP response code: "+statuscode);

logger.addInfo("Return code "+statuscode+": Something went wrong on the server.");

logger.addInfo("Response received: "+resp);

// Set this task as failed.

ctxt.setFailed("Request failed.");

} else if (statuscode == 503) {

logger.addError("Failed to configure Spark. HTTP response code: "+statuscode);

logger.addInfo("Return code "+statuscode+": Server is overloaded with requests. Try again later.");

logger.addInfo("Response received: "+resp);

// Set this task as failed.

ctxt.setFailed("Request failed.");

} else {

logger.addInfo("All looks good. HTTP response code: "+statuscode);

}

taskMethod.releaseConnection();
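Both scripts repeat the same `if`/`else if` chain, and the final `else` reports "All looks good" for any status code that is not listed, including unexpected errors. A minimal sketch of a shared lookup that treats only 2xx responses as success. `STATUS_MESSAGES` and `classifyStatus` are hypothetical names, and the descriptions cover a subset of the codes checked in the scripts:

```javascript
// Descriptions shortened from the log messages used in the scripts.
var STATUS_MESSAGES = {
    400: "The request was invalid or cannot be otherwise served.",
    401: "Authentication credentials were missing or incorrect.",
    403: "The request is understood, but it has been refused or access is not allowed.",
    404: "The URI requested is invalid or the resource requested does not exist.",
    409: "The request conflicts with some established rule of the system.",
    500: "Something went wrong on the server."
};

// Hypothetical helper: classifies an HTTP status code.
// Returns { level: "info" | "warn" | "error", failed: boolean, message: string }.
function classifyStatus(statusCode) {
    var code = Number(statusCode);
    if (code >= 200 && code < 300) {
        return { level: "info", failed: false, message: "All looks good." };
    }
    var message = STATUS_MESSAGES[code] || "Unexpected HTTP response code.";
    if (code === 409) {
        // Mirrors the scripts: a conflict is logged as a warning, not a failure.
        return { level: "warn", failed: false, message: message };
    }
    return { level: "error", failed: true, message: message };
}

// In the custom task this could be wired up roughly as:
//   var result = classifyStatus(taskMethod.getStatusCode());
//   if (result.failed) { ctxt.setFailed("Request failed."); }
```

Keeping the messages in one table means a new status code only needs one extra line rather than another branch in each script.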

The example workflow first adds the user and then posts a message.

For the example input you will need to obtain your own API key by following the instructions.

At the moment the tasks don’t have any rollback functionality and are limited to posting messages and adding people to rooms. I plan to build upon these when I get some more time.
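One possible shape for a rollback of Spark_Membership_Create, as a sketch only: the Spark API documents a `DELETE /v1/memberships/{membershipId}` call, and the response to the create request includes the `id` of the new membership. `buildMembershipRollback` is a hypothetical helper, not part of the attached tasks, and it assumes the standard `JSON` object is available in the script engine:

```javascript
// Hypothetical helper: given the JSON body returned by POST /v1/memberships,
// describe the request that would undo it.
function buildMembershipRollback(createResponseBody) {
    var created = JSON.parse(String(createResponseBody));
    if (!created.id) {
        throw new Error("Create response did not contain a membership id.");
    }
    return {
        method: "DELETE",
        uri: "/v1/memberships/" + encodeURIComponent(created.id)
    };
}

// Example with a made-up response body (the id is a placeholder).
var rollback = buildMembershipRollback("{\"id\": \"MEMBERSHIP_ID_PLACEHOLDER\"}");
```

The returned description would still need to be sent with the HTTP client, and how it is registered as an undo step in UCSD is left open here.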

The CloupiaScripts are also stored on my GitHub repo (which will probably be updated more often than this post) - https://github.com/clijockey/UCS-Director/tree/master/CloupiaScript

The next Spark project will be to create a ‘chatbot’ so that I can make use of the UCSD API as well and request items, although this is a bit bigger and will require some thought.

I am also in the process of updating for UCS Director 5.4 including some additional functionality and tidier code.